AI Revolution: how Artificial General Intelligence will change our lives

What components do we need to unlock a new wave of economic growth, and what is the current state of progress?

Intro

Have you ever wondered how much tech companies have changed our lives?

Let's begin with a fun example. Here's a screenshot from a Thanksgiving episode of the final season of "Friends," which aired about twenty years ago. I bet it doesn't look much like your dinner tables, does it? Try to count all the smartphones you see here:

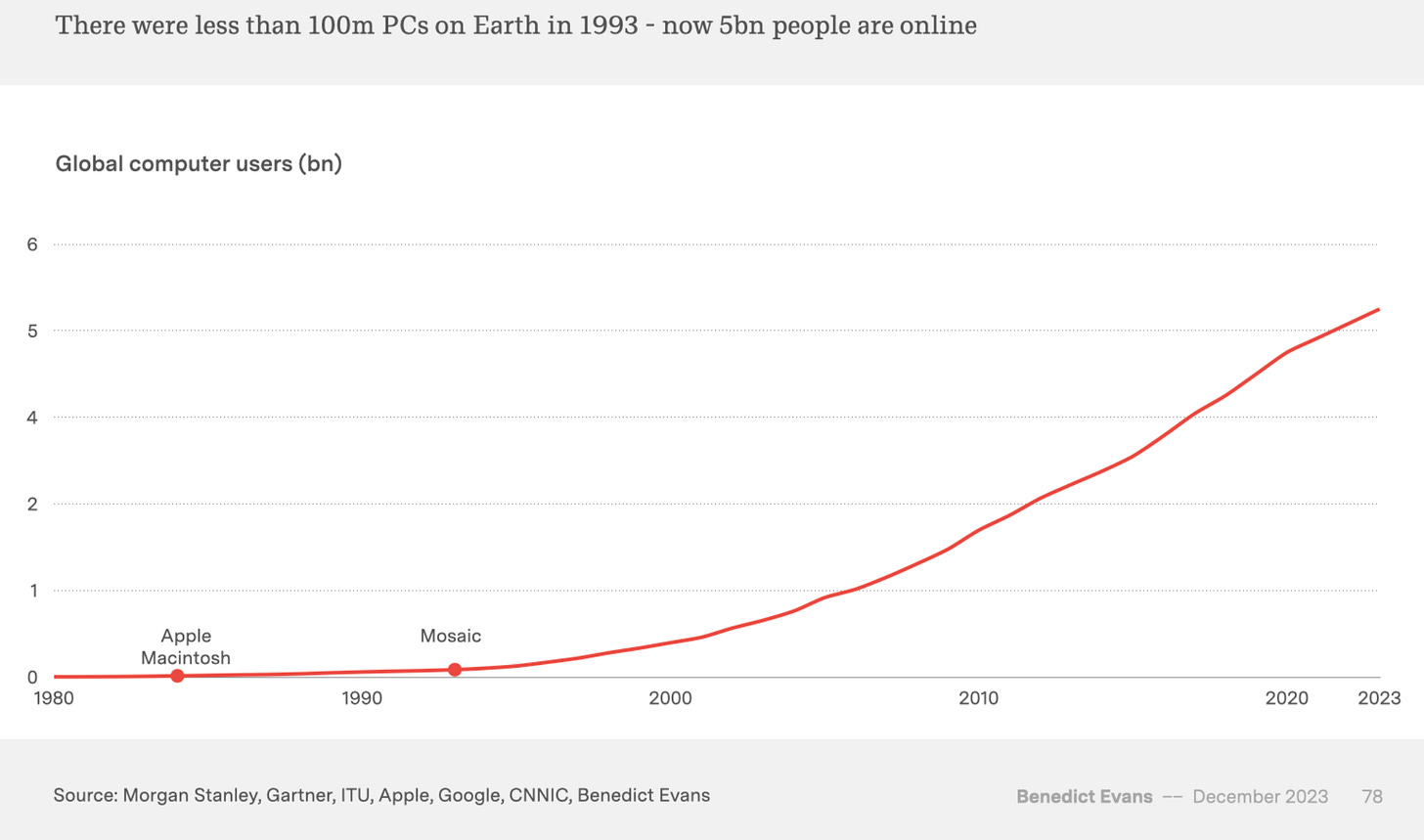

Zero, right? Smartphones are everywhere now; they're with us all the time. I don't know about you, but lately, when I watch old movies, I get so confused. How did people manage their lives back then? Nowadays, nearly every adult in the world has some way to connect:

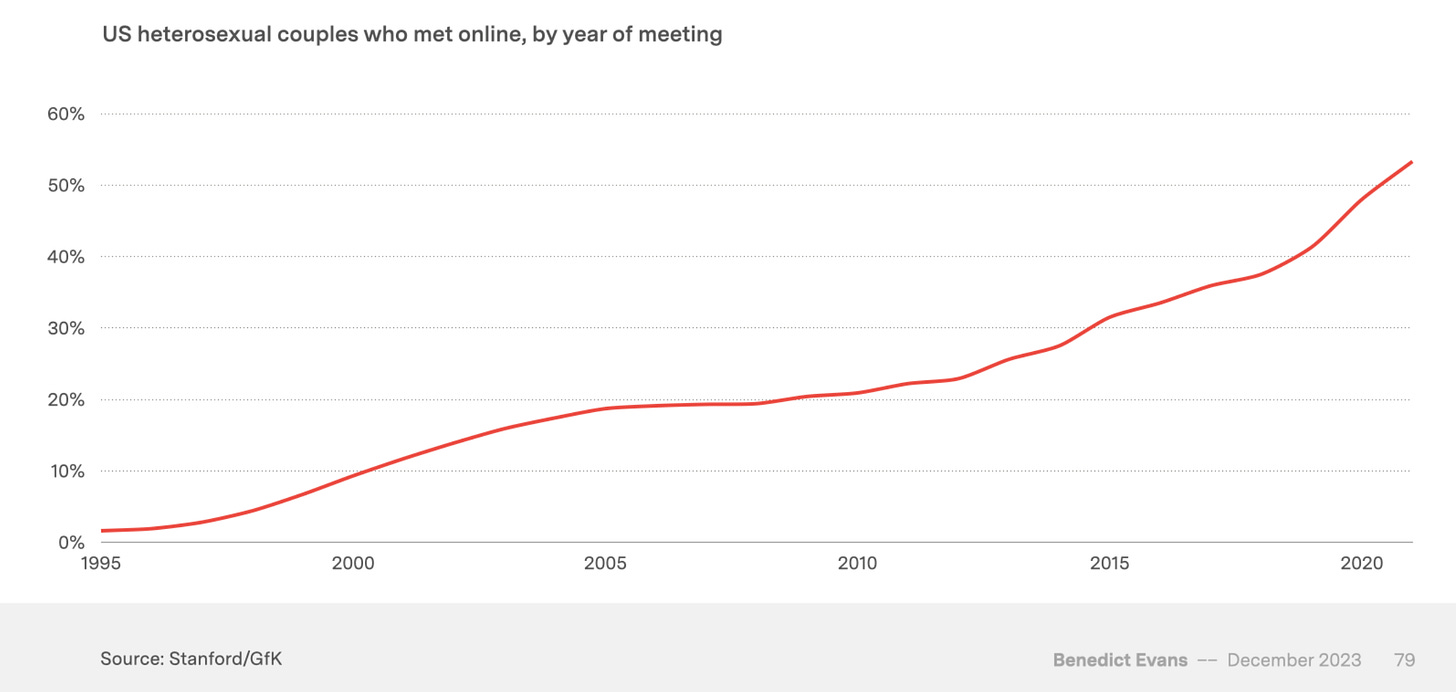

It's not just about not having phones at the dinner table. It's about how computers have altered our lifestyle and how we solve problems. You could argue whether it's for better or worse, but life is definitely different now:

Social networks have made us more connected. Remote work has saved us hours of daily commuting. News spreads faster, and more people have the chance to create content. Netflix, Spotify, and TikTok have changed how we spend our time. Search engines, ads, Amazon, cloud computing, and Stripe have transformed how we shop and grow our businesses.

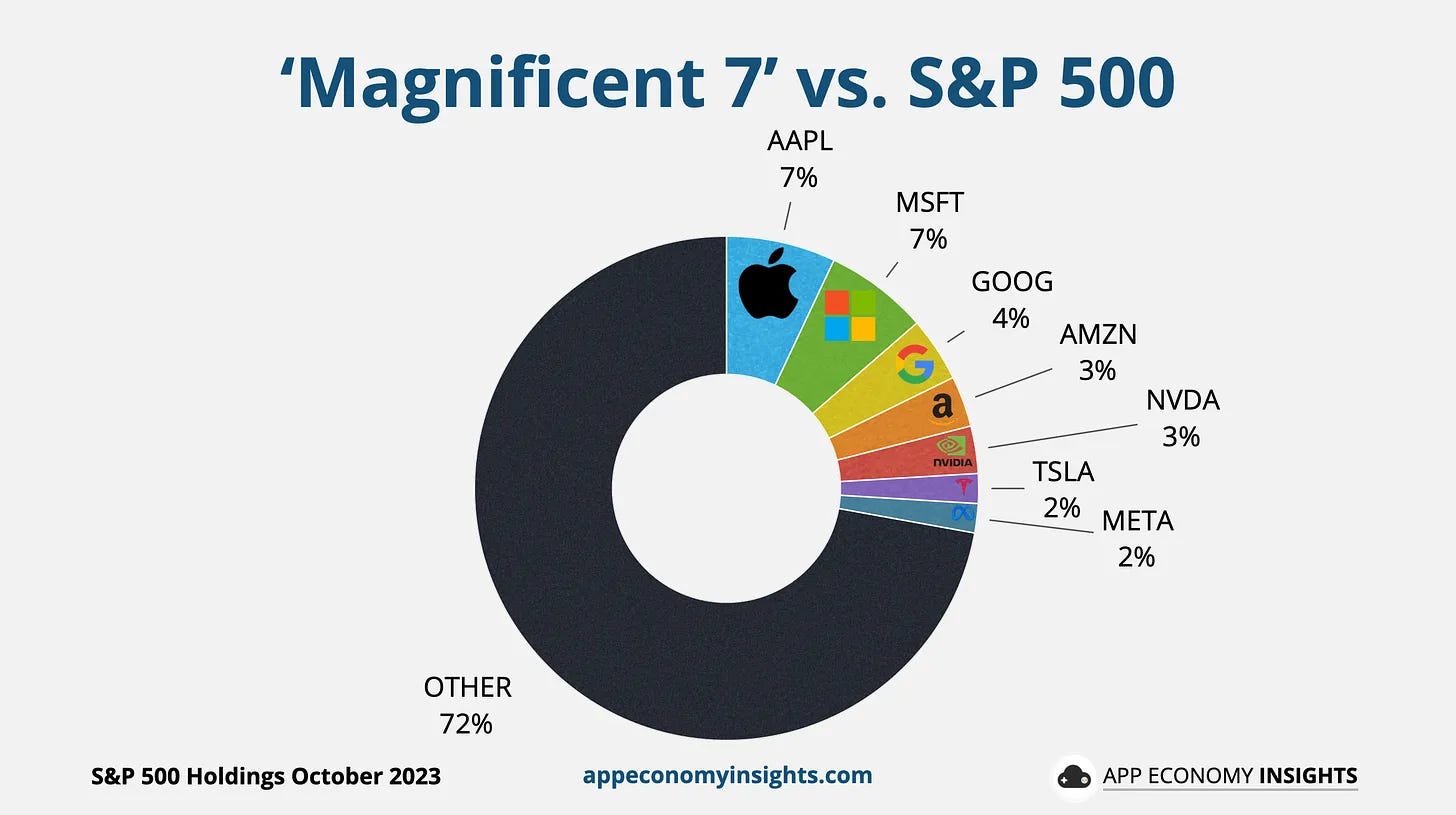

And from an economic standpoint, consider the size of BigTech companies compared to others:

(Source)

The seven companies combined have a market capitalization of $12 trillion in 2023. That's about a quarter of the entire US market! It feels like we're in a silly sci-fi speculation where everything seems odd compared to the recent past.

I recently heard rumors from a reliable source that Microsoft plans to invest $100 billion in its data centers. To grasp how big this number is, check out the list of Megaprojects I've picked below. (Note that I chose the ones I like; there are many more on the Wikipedia list.)

It's remarkable that a tech company is spending money like a government on a big national project. If you have doubts because there's no official announcement, how about this: TSMC is building three chip factories in Arizona. They're investing a huge $65 billion! This year, we expect two fabs to operate at full capacity.

This hints at a new round of the "Friends" effect, where today's shows turn into heartwarming flashbacks to the past. Watching something like "Ted Lasso" or "Don't Look Up" might seem strange in ten years because of how much technology will change.

Big tech is all in on AI, investing lots of resources into infrastructure to improve the models. Wouldn't it be fantastic to get direct insights into what these companies are thinking, rather than relying on rumors or secondhand reports?

Welp, I've got some good news for you!

It looks like the CEOs of these companies are in love with podcasting. The sheer number of interviews they do sometimes feels like a glitch in the matrix. Picture a three-hour chat with someone who's great at talking, knows their stuff, and is passionate about sharing their vision. Why not have one every month or two, right?

Podcasts are incredible. You've got these billionaires sitting there, chatting for hours about the most interesting things that await us.

Seriously, this is a freaking goldmine! After listening to this episode with the head of the most incredible team in the world, I was inspired to write this article. It's not about Mark, but Demis Hassabis, a British AI researcher and neuroscientist. He's the co-founder of DeepMind, a leading AI research lab that Google acquired in 2014. Today, he's responsible for all Google's AI projects.

So what's in there? The main theme is that we're in for a massive technological advance that will change everything with the arrival of AGI (Artificial General Intelligence). I'll divide the article into three major parts and try to answer one important question in each part.

Chapter 1. What can modern technology do and why is it not enough?

Chapter 2. What will it take to unlock the technologies of the future?

Chapter 3. How can you stay on top of things and not go crazy?

The podcast that inspired me is hosted by the talented Dwarkesh Patel. What I love most about him is his ability to steer conversations with thoughtful follow-up questions. In this case, it gets us a rich source of useful information about Google's future plans. I've broken down the talk into short videos and added my thoughts and context to create a complete story. Enjoy!

Table of contents

Chapter 1.

What can modern technology do and why is it not enough?

Meet DeepMind, one of the coolest companies in the world

There is a possibility for a revolution in biotech

AI has not had much of an impact so far

Scaling models is a sure way to get more capabilities

Scaling isn't the only option

The first idea is to incorporate multimodality

The second idea is to give the model agency

The third idea is to combine multimodality and agency into robotics

Chapter 1 summary

Chapter 2.

What will it take to unlock the technologies of the future?

A well-trained model can't do much if it doesn't know how to plan

DeepMind uses games to learn planning skills

Planning has greatly improved in the next generation of the model

DeepMind continues to experiment with planning

Can existing systems move science forward?

What does an AGI recipe look like?

Can we trust a model that is too complex to understand?

Chapter 2 summary

Chapter 3.

How can you stay on top of things and not go crazy?

We now live in a very intense world

There have been periods like this before in history — what can we learn from them?

What can we expect this time and how is the new period different?

What is the current plan so far?

Takeaways

Chapter 1. What can modern technology do and why is it not enough?

1.1 - Meet DeepMind, one of the coolest companies in the world

DeepMind is one of the leading AI labs and has the most ambitious mission I've ever seen:

Their mission is to solve intelligence to solve all our problems. Pretty cool, huh?

These aren't just empty words; they're actually making great progress towards this goal. DeepMind became part of Google 10 years ago, but kept its research agenda and works independently.

I'd like to show you some examples of their amazing work, but it's tough. They've done so much. They've aided doctors in spotting eye conditions, found millions of new materials, and created human-like artificial voices. Seriously, try clicking "load more" on their research blog until you get to the end. It takes a while!

I want to talk about my favorite: AlphaFold. This model has cracked a biological problem that has baffled scientists for 50 years. Here's a great explanation:

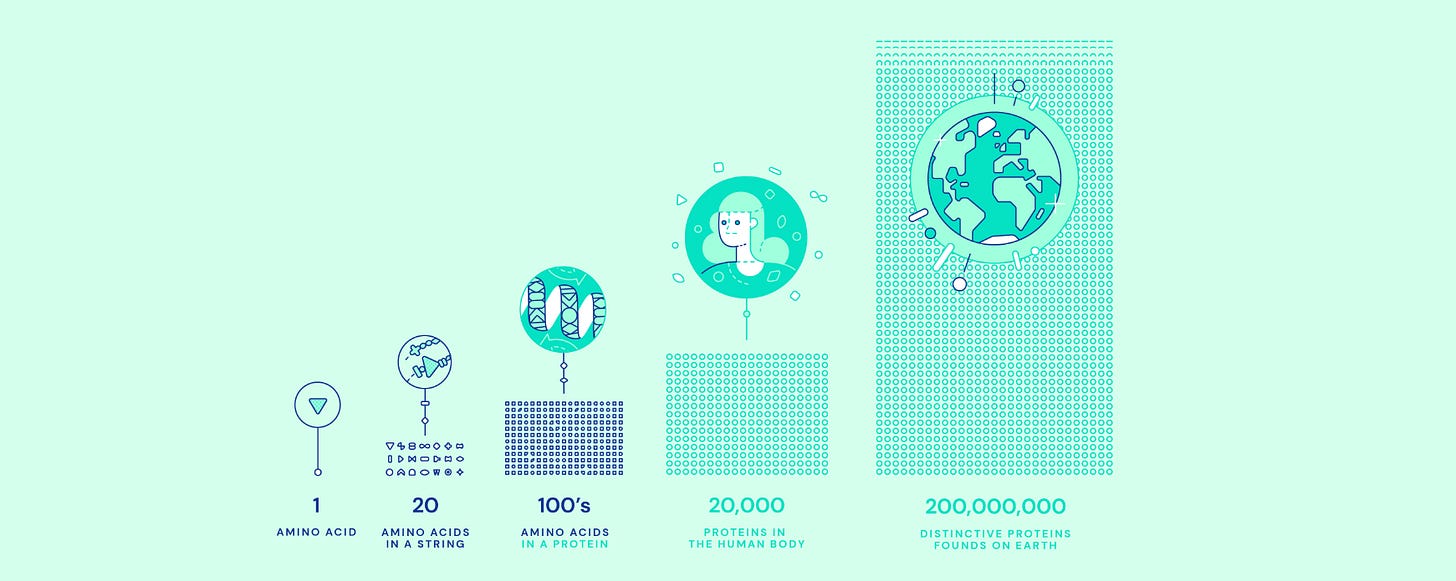

Scientists named this problem protein folding when they discovered DNA sequencing in the late 1970s. For 30 years, sequencing DNA involved a lot of manual work, until new automated methods made it cheaper and faster. Today we know the genetic code for more than 200 million proteins, and every year we find tens of millions more.

But here's the catch. The genetic code is a complex blueprint of a protein and doesn't say much on its own. Just knowing the code won't tell you what the protein does. [Simplifying] That's because its physical 3D shape determines its functions. You can figure out the shape from the code, but it's super hard.

The best known experimental method to find out the shape of a protein takes a year and costs $120,000. With this and all other methods, we've only found out the shapes of about 0.1% of all known proteins. We're lagging behind because new proteins are being found faster than we can study them. Moreover, not all structures can be found using these methods at all.

AlphaFold has given us an open-sourced database with shapes for 200 million proteins. The predictions aren't always perfect, but they're about 90% accurate on average. That's pretty close to what experimental methods can achieve.
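To get a feel for the gap, here is a back-of-the-envelope calculation using only the figures quoted above. It is a rough sketch: it assumes every remaining structure would cost the same as the quoted experimental price, which is a simplification, and the variable names are mine.

```python
# Back-of-the-envelope arithmetic with the figures quoted in the text.
KNOWN_PROTEINS = 200_000_000       # proteins with a known genetic code
COST_PER_STRUCTURE_USD = 120_000   # quoted cost of one experimental structure
SHARE_SOLVED = 0.001               # about 0.1% of shapes found experimentally

solved = round(KNOWN_PROTEINS * SHARE_SOLVED)
remaining = KNOWN_PROTEINS - solved

# Assumption: each remaining structure costs the same as the quoted figure.
remaining_cost_usd = remaining * COST_PER_STRUCTURE_USD

print(f"Shapes found by experiment: {solved:,}")
print(f"Shapes still unknown: {remaining:,}")
print(f"Cost to find the rest by experiment: ${remaining_cost_usd / 1e12:.1f} trillion")
```

Under these assumptions the experimental route would cost tens of trillions of dollars, which is why a prediction model that gets close to experimental accuracy matters so much.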

1.2 - There is a possibility for a revolution in biotech

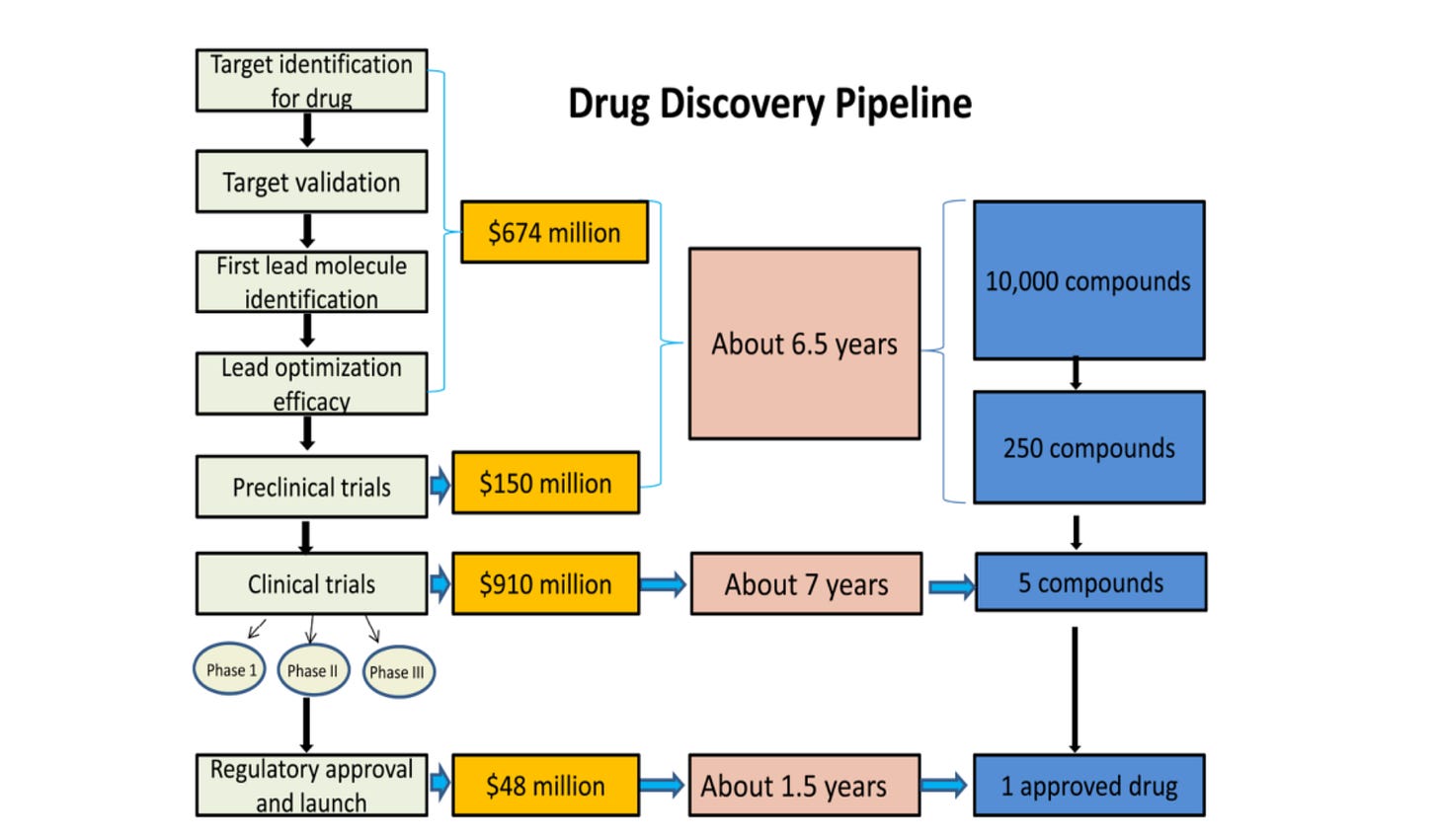

AlphaFold could change the way we discover drugs and understand diseases. Instead of trial and error, we could design exactly what we need. The ability to build like engineers has been a longtime dream of biologists. It's promising because our current way of finding drugs is not very efficient. Just look at the drug discovery pipeline:

(Source. Data is at least 10 years old, but I think it's still relevant.)

It usually takes around 15 years of research, testing, and regulatory compliance to bring a new drug to market. Plus an investment of over $1 billion. It's a long and expensive journey with lots of manual wet-lab work and significant scientific risks.

The cherry on top is that even approved drugs don't always work. Antibiotics usually work well against bacterial infections, with success rates over 80%. Antidepressants help with depression about 50-70% of the time. And so on. This meta-analysis found that only 11 out of 17 common treatments make a difference that patients would notice. Have you ever taken headache pills and they just didn't work? Like, WTF? I'm happy when they do the job, but aren't meds supposed to be reliable?

DeepMind is highlighting many projects where scientists are using AlphaFold. Today's malaria vaccines, for example, are only partially effective. This is because the parasites can change their appearance and evade the immune system. This is why over 500,000 people still die of malaria every year. AlphaFold has significantly increased our knowledge of an important protein that has been a major roadblock to success. This breakthrough has removed a long-standing obstacle to creating a successful vaccine.

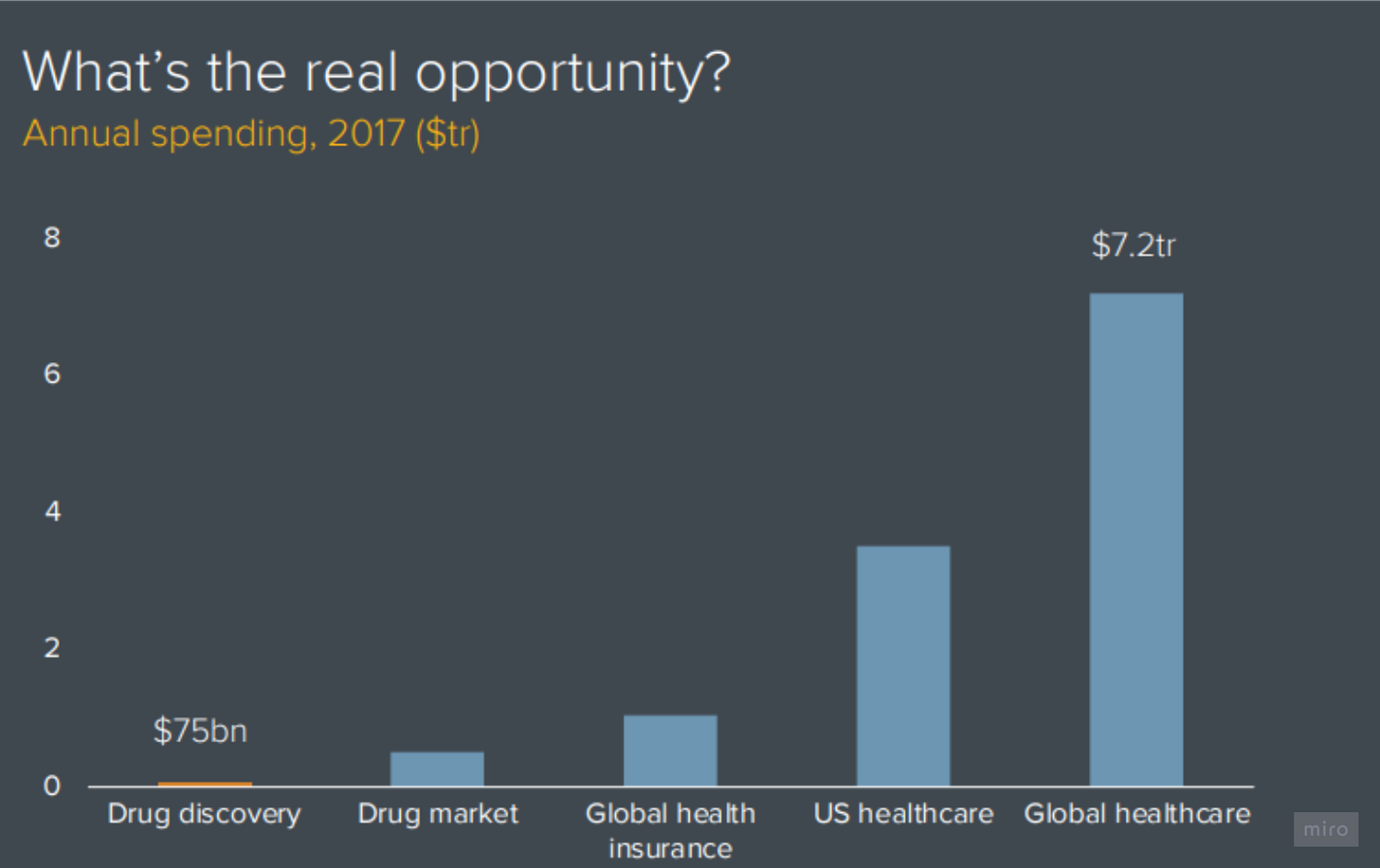

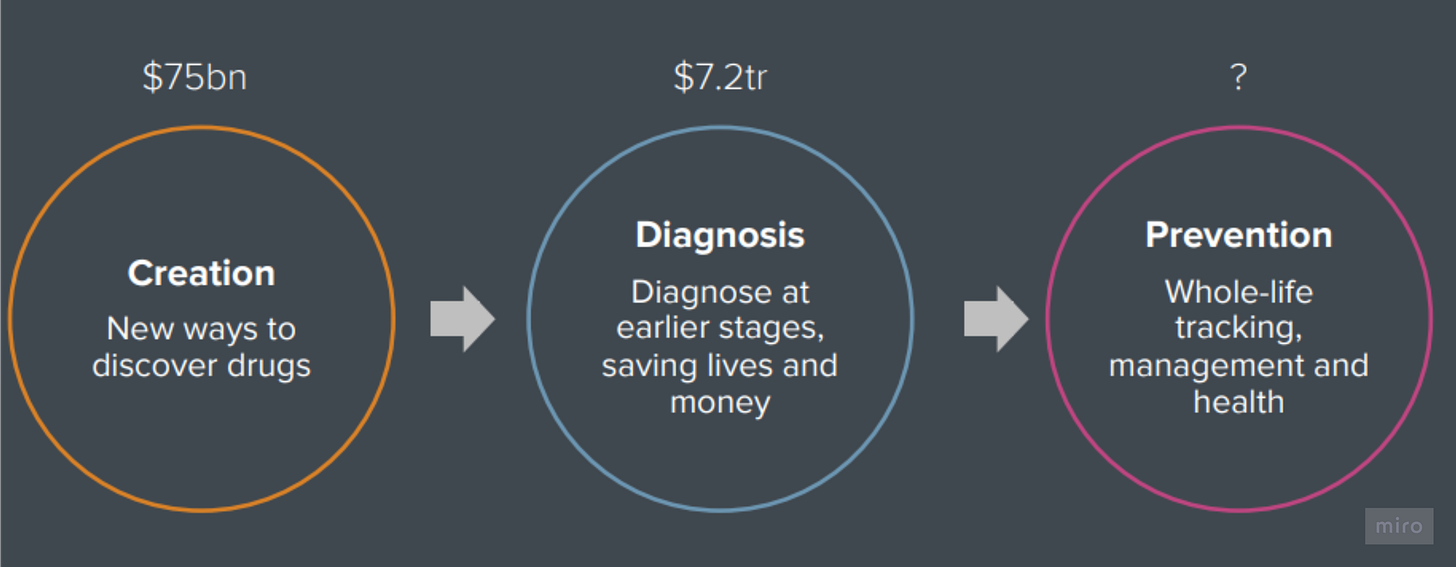

Sounds cool, doesn't it? But all these projects have one thing in common: they are all still in development. I haven't seen any AlphaFold-inspired drugs in clinical trials yet. Biotech takes time. But one day, engineering biology will change the way we diagnose, treat, and manage diseases. In his presentation, "The End of the Beginning," Ben Evans shows us the bigger picture:

Drug discovery may not seem like a big deal in a market worth many trillions of dollars. That means AI could have an even bigger impact by helping to develop new treatments and personalized medicine. But there's more to it. Ben raises an interesting point: How much do we lose by being sick in the first place?

Probably a lot. Let's talk more about the current and potential impact of AI.

1.3 - AI has not had much of an impact so far

Here is finally the first video from the podcast!

Demis believes we're only at the beginning and that today's models hardly come close to what is possible. Ben Evans has shown something similar with his example from drug discovery ➝ preventive medicine. This is just one area, but Demis suggests there are many others.

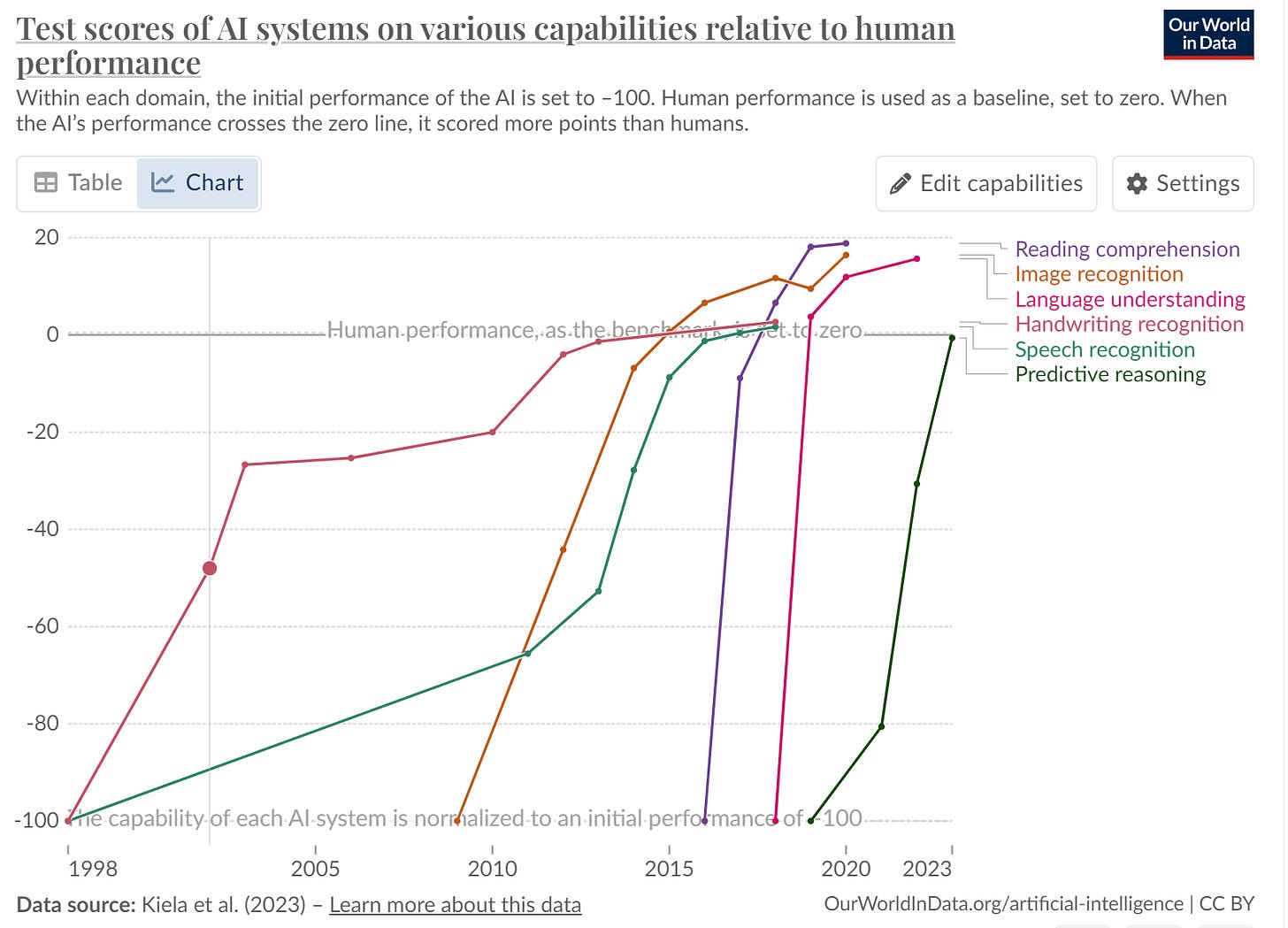

Over the last ten years, we've created Narrow AI systems that can do specific tasks that normally require human intelligence. They can't handle everything at once, but we've made them work in different areas one by one. They can now recognize speech, understand images, and comprehend written language. And they can do all this just as well as humans:

(Source)

This has made things like the Tesla Autopilot possible. It can perceive its surroundings and keep learning to make better decisions.

Now we have moved on to creating models that can do many things. They can summarize text, answer questions or explain memes within a single model. This is called general capabilities, which help a model work well even in new situations for which it hasn't been trained.

And this has paid off with real-world impact in the form of job automation. Recently, Klarna shared that their OpenAI-powered system is doing a better job than their old customer support. They have invested $2-3 million and now expect to see about $40 million in profit this year.

Deloitte, PwC, and McKinsey's studies suggest that automation or restructuring could impact about 40% of jobs in the next 5-10 years. They also predict a massive $15.7 trillion increase in global GDP by 2030. But I'd like to point out that many economic studies on this topic are not very well-designed or accurate. So I'll make a somewhat generic conclusion: we'll see more workforce automation in the coming years.

As AI automates more and more valuable tasks, it might boost our economy ten times in this century. That's not a sure thing, but the balance of evidence leans towards it being more likely than not.

Growth theory says that labor is the only economic input that doesn't grow with the economy. However, if automation becomes affordable (~$15,000 per agent), people will invest in digital workers. This will boost growth and lead to more automation. Even partial automation can make a big difference. In time, we can expect a workforce orders of magnitude larger, working in its own AI economy.

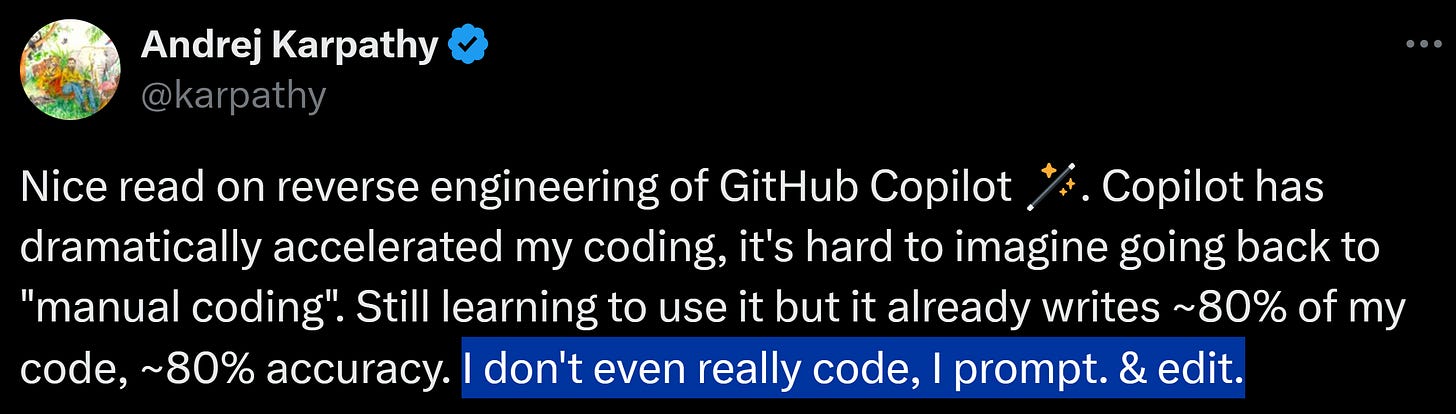

Jobs in customer service, back office or sales are currently the most vulnerable to automation. But who says it stops there?

Most of the economic value lies in the most challenging tasks, like the last 20% in Andrej Karpathy's example. Or take Ben Evans' idea about healthcare. Imagine an AI working as a qualified medical assistant. Or even replacing your doctor and doing everything from diagnosing you to planning your treatment.

Such an AI system would need to know a patient's symptoms, past health, and test results (such as MRI or X-ray images) to figure out health problems and suggest how to treat them. The AI has to combine and make sense of lots of different data. It needs to understand how the human body and illness work and reason about what might be wrong. It needs to weigh how well treatments work against their side effects, and keep up with new health studies and how individual people respond.

Sounds difficult. As Demis said, AI needs more capabilities to capture this value: planning, search, memory, reliability, and so on.

1.4 - Scaling models is a sure way to get more capabilities

The scaling laws say that making models bigger leads to a predictable increase in performance, right? But there are nuances:

Google has just introduced a new family of models called Gemini. These models are computationally efficient, and Google also has the most advanced computing power.

Those are the plans for 2024, and Meta probably already has ~150,000 H100 GPUs. Mark mentioned that they plan to integrate an infrastructure of 600,000 H100s by the end of the year. To understand the scale, GPT-4 was rumored to be trained on the equivalent of ~9,000 H100s. That means Meta's planned setup is massively larger: about 67 times bigger. And Google supposedly has even more! Of course, these are just rumors; there is no official info to confirm this.
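If you want to check that comparison yourself, here is the arithmetic in a few lines of Python. The GPU counts are the rumored figures quoted above, not confirmed numbers, and the variable names are mine.

```python
# Rumored figures from the text; none of them are officially confirmed.
META_CURRENT_H100 = 150_000
META_PLANNED_H100 = 600_000
GPT4_RUMORED_H100_EQUIVALENT = 9_000

ratio_current = META_CURRENT_H100 / GPT4_RUMORED_H100_EQUIVALENT
ratio_planned = META_PLANNED_H100 / GPT4_RUMORED_H100_EQUIVALENT

print(f"Meta today vs. the GPT-4 rumor:   {ratio_current:.1f}x")   # 16.7x
print(f"Meta planned vs. the GPT-4 rumor: {ratio_planned:.1f}x")   # 66.7x
```

Keep in mind this only compares hardware counts. It says nothing about how long a training run lasts or how efficiently the GPUs are used.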

Alright, let's talk about scaling. Google definitely has the resources to scale up. But Demis points out that it's not that simple. With each scaling step, you have to solve new logistical problems. You can't just stack more layers; you have to adjust the scaling recipe each time. The scaling laws predict better performance on metrics, but that doesn't always translate to better capabilities. Some ideas work at specific scales, and others don't.

So scaling is a difficult task, even if you have a lot of resources. And you don't want to scale up more than one order of magnitude per attempt.
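To make "predictable increase" a bit more concrete, here is a toy sketch. Scaling laws are usually described with a power-law shape, so I use one here, but every constant below is made up for illustration and does not come from Google, OpenAI, or any published fit. Each step is one order of magnitude bigger, matching the "one step at a time" advice above.

```python
def predicted_loss(params: float, a: float = 10.0, alpha: float = 0.1,
                   floor: float = 1.5) -> float:
    """Toy power law: loss = floor + a * params ** -alpha.

    All constants are hypothetical; real values must be fitted to real runs.
    """
    return floor + a * params ** -alpha


# Four model sizes, each 10x bigger than the previous one.
sizes = [1e9 * 10 ** step for step in range(4)]
losses = [predicted_loss(n) for n in sizes]

for n, loss in zip(sizes, losses):
    print(f"{n:.0e} parameters -> predicted loss {loss:.3f}")

# Gain from each 10x step: the curve keeps improving, but by less each time.
gains = [before - after for before, after in zip(losses, losses[1:])]
print("Improvement per 10x step:", [f"{g:.3f}" for g in gains])
```

The curve tells you what the metric should do, not which new capabilities show up at the next size. That gap is exactly the nuance Demis points out.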

1.5 - Scaling isn't the only option

Scaling works amazingly well because we first found an architecture that scales well. Modern neural network architectures are actually quite simple. We should focus half of our efforts on testing the limits of scaling and the other half on developing new things. The most promising innovations are those that enable grounding.

Grounding refers to the ability of an AI system to connect its results to real-world information or context. Grounding helps it to produce more accurate results by anchoring them to verifiable external data. This makes the model's reasoning more understandable from a human perspective.
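Here is a tiny sketch of what "anchoring to verifiable external data" can look like in code. It is a toy, not how any production system works: retrieval is plain word overlap, the three facts are taken from this article, and the function names and the overlap threshold are my own assumptions.

```python
import re
from typing import List, Optional, Set

# A tiny "external source" the answers must be anchored to.
FACTS = [
    "AlphaFold predicts the 3D shape of a protein from its genetic code.",
    "DeepMind was acquired by Google in 2014.",
    "AlphaGo combines neural networks with Monte Carlo Tree Search.",
]


def tokens(text: str) -> Set[str]:
    """Lowercase words and numbers in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, facts: List[str], min_overlap: int = 3) -> Optional[str]:
    """Return the fact sharing the most words with the question, or None."""
    q = tokens(question)
    best = max(facts, key=lambda fact: len(q & tokens(fact)))
    return best if len(q & tokens(best)) >= min_overlap else None


def grounded_answer(question: str) -> str:
    """Answer only when a supporting fact exists; otherwise admit the gap."""
    fact = retrieve(question, FACTS)
    if fact is None:
        return "I don't have a source for that."
    return f"According to my notes: {fact}"


print(grounded_answer("When was DeepMind acquired by Google?"))
print(grounded_answer("What is the capital of France?"))
```

The point of the design is the refusal branch: a grounded system prefers "I don't have a source" over a confident guess.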

Pre-training a language model on lots of text is a basic form of grounding. By exposing the model to many patterns of language use, it learns how people use words. This foundation allows it to understand and generate human-like text. As it trains, the model figures out patterns by itself; we don't have to invent new architectures for it to get better. But for the model to know which patterns are good, we need to give it some feedback.

A more complex form of grounding is Reinforcement Learning from Human Feedback (RLHF). GPT-4 used it in the final training phase. A selected group of specialists rated the responses to help it understand what people prefer. For example, people don't appreciate it when someone misleads them or lies to them.

Techniques such as RAG, fine-tuning, or in-context learning also provide grounding. However, they're still based on text. Yann LeCun, Chief AI Scientist at Meta, believes that text alone is probably not enough:

He talks about the Moravec Paradox. This shows that some things are easy for humans but difficult for machines. We humans are simply better at using fewer examples to learn things like seeing, moving, and thinking. AI models today often rely on brute-forcing to get results, which is an inefficient way to do things. The more complex the task at hand, the more this inefficiency matters. Demis has some thoughts on this topic:

We're approaching the limit of our current progress. What should we do if we can't supply enough text for highly advanced topics? We have a few ideas in the works.

1.6 - The first idea is to incorporate multimodality

Multimodality means handling audio, images, video in addition to text. The use of all these types of info makes learning more sample-efficient and gives better generalization.

For instance, to explain what a "dog" is, it's better to show a video than to write it all out. The model gets stronger at dealing with noise or gaps by really getting the context and the relationships between the different modalities. For example, if a dog barks but doesn't wag its tail, it's likely on alert, not in a playful mood. There's also this idea of spatial representation or knowing where things are in space. The model figures out where the edges of objects are and how they relate to each other, e.g. "above" or "next to".

The SORA model by OpenAI is a recent example. Its creators wanted the model to recognize how an object stands out from its surroundings. They also wanted it to track this object continuously through a video's frames. Since a video is just images linked over time, it's impressive if the object remains the same even as time changes. SORA can do that:

1.7 - The second idea is to give the model agency

The best way to understand what a dog is? Play with it and enjoy some time together! Agency means getting feedback from your surroundings by trying out your ideas. Same as multimodality — it helps to solve complex tasks with fewer examples.

For instance, to understand the actions or intentions of others, you need a basic "Theory of Mind". The most effective approach for a model to get something like that is to test its ideas in a simulation. In this way, it can adjust its thoughts if they aren't quite right.

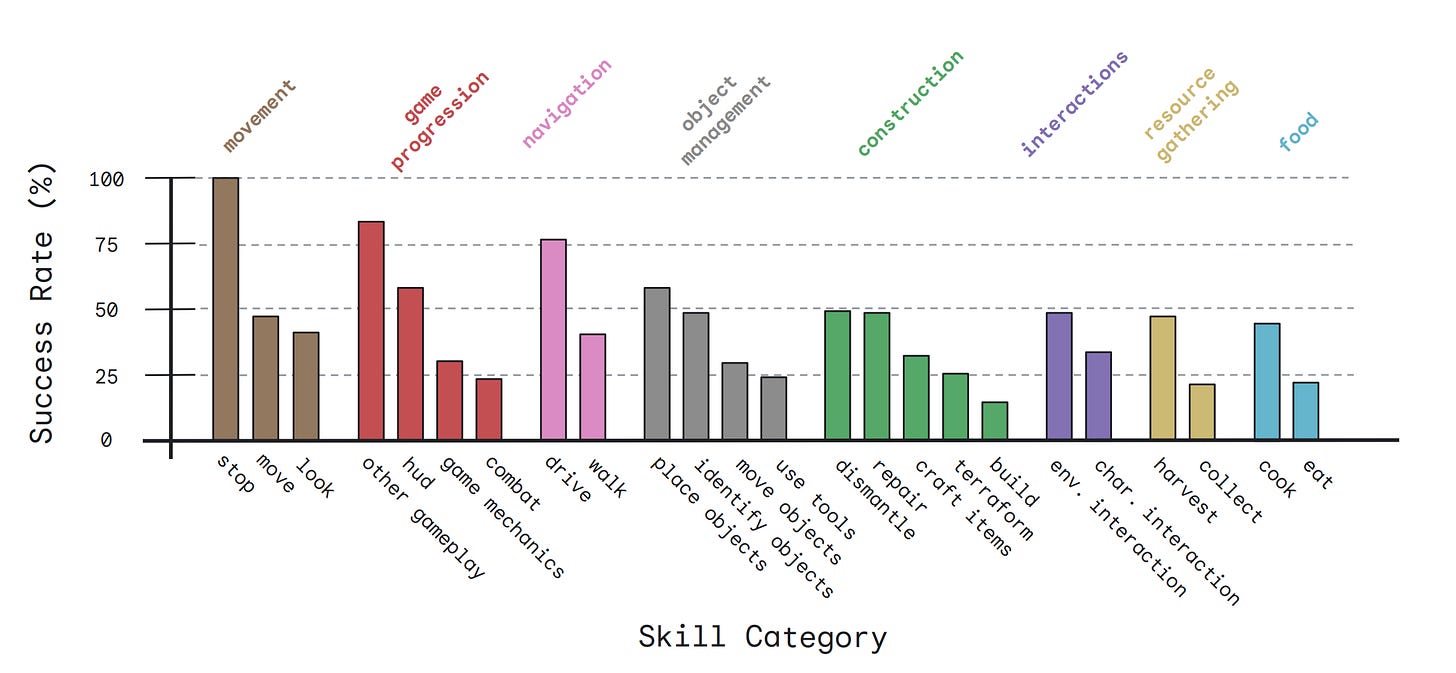

Look at SIMA, a research project that uses modern video games as a sandbox environment. This model learns to navigate a 3D world and perform tasks using only two things: the visual elements of the game and a text description of the goal. It was tested with 600 simple skills, ranging from moving around to interacting with objects.

On average, humans achieve a proud 60% success rate across all tasks. Soon AI will be playing games for us. How cool is that!

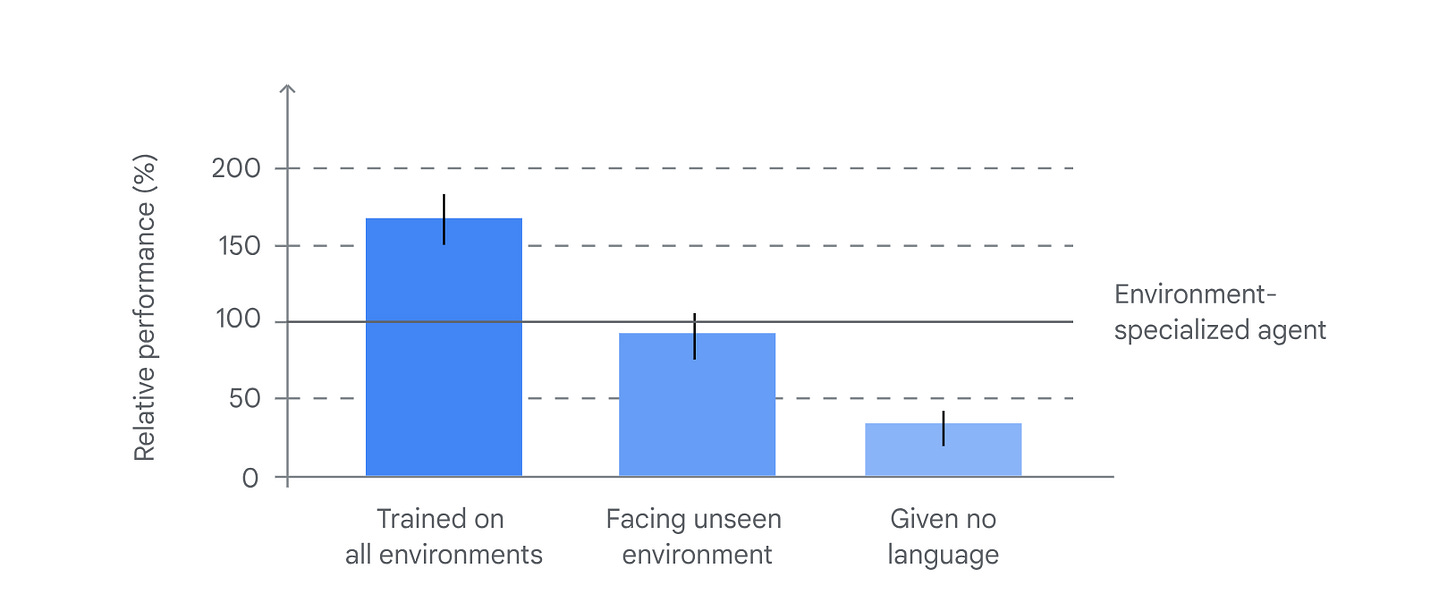

The key takeaway is that an agent that has been trained to play multiple games performs better than one that has been trained to play just one game. The single-game agent is labeled "environment-specialized" in the image below. Learning more broadly makes you generally smarter:

The authors also showed that learning a language and understanding environments boost each other. Even if a task doesn't require language, learning language skills can lead to helpful, broad concepts that make learning faster. SIMA isn't about complete agency, like comparing your mental map to the actual world, but it's a start in that direction.

1.8 - The third idea is to combine multimodality and agency into robotics

Robotics involves letting the model physically interact with the real world. This is a more intense version of the earlier ideas. But mistakes have bigger consequences, and there is often not enough good data for training.

Robotics shows us the Moravec paradox perfectly. A robot must process info from sensors, know where it is, and act quickly. Check out what the RT-1 can do:

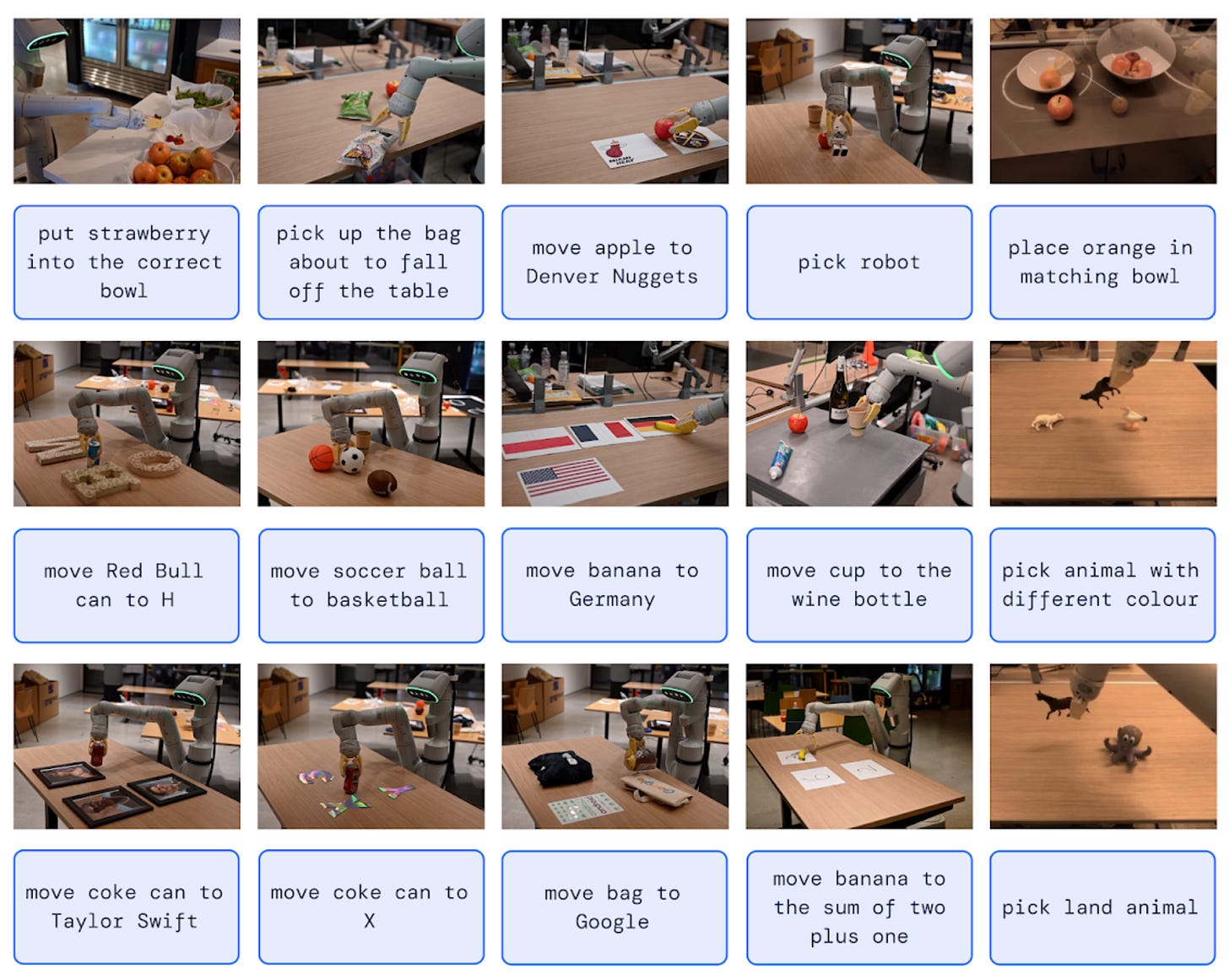

The Robotic Transformer (RT-1) understands the connection between tasks and objects. So if you give it a task, it can figure out what to do and use the right object for it.

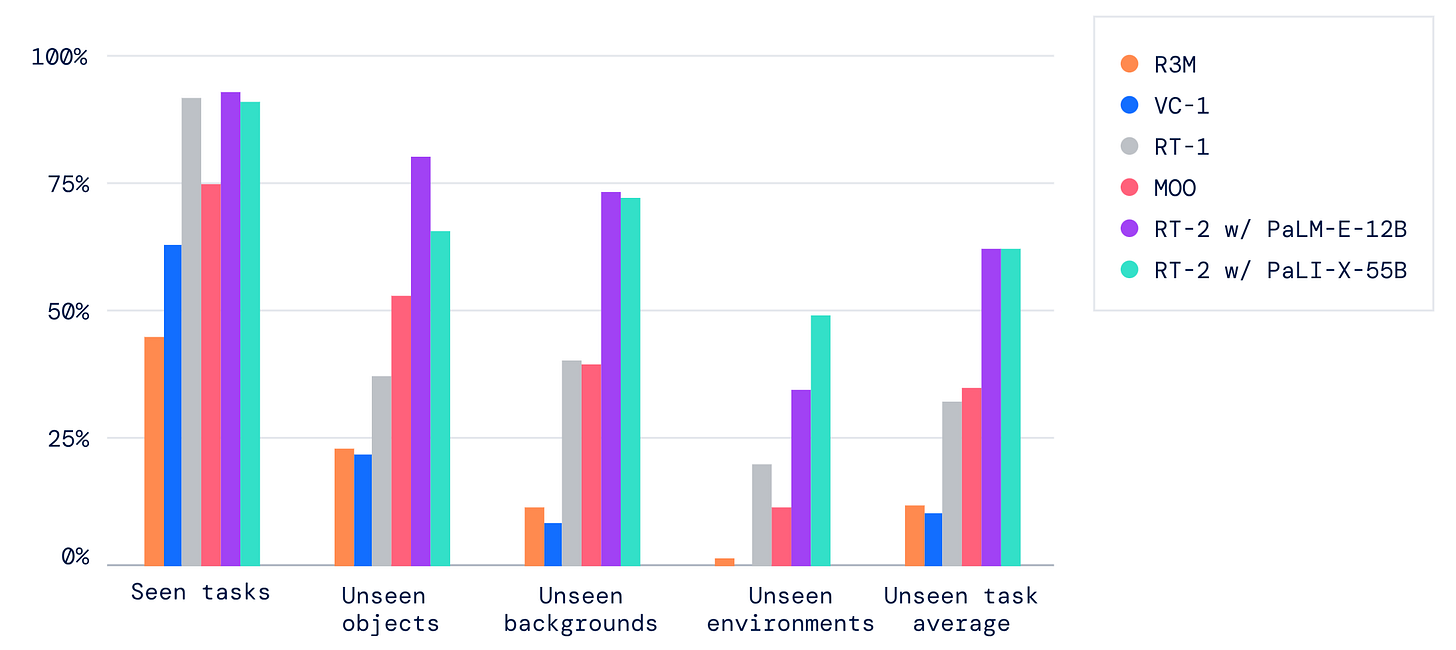

The new model, RT-2, has learned from the data of the RT-1 robots. It handles about 700 tasks with 90% accuracy. It can also perform new tasks and work in situations for which it hasn't been trained:

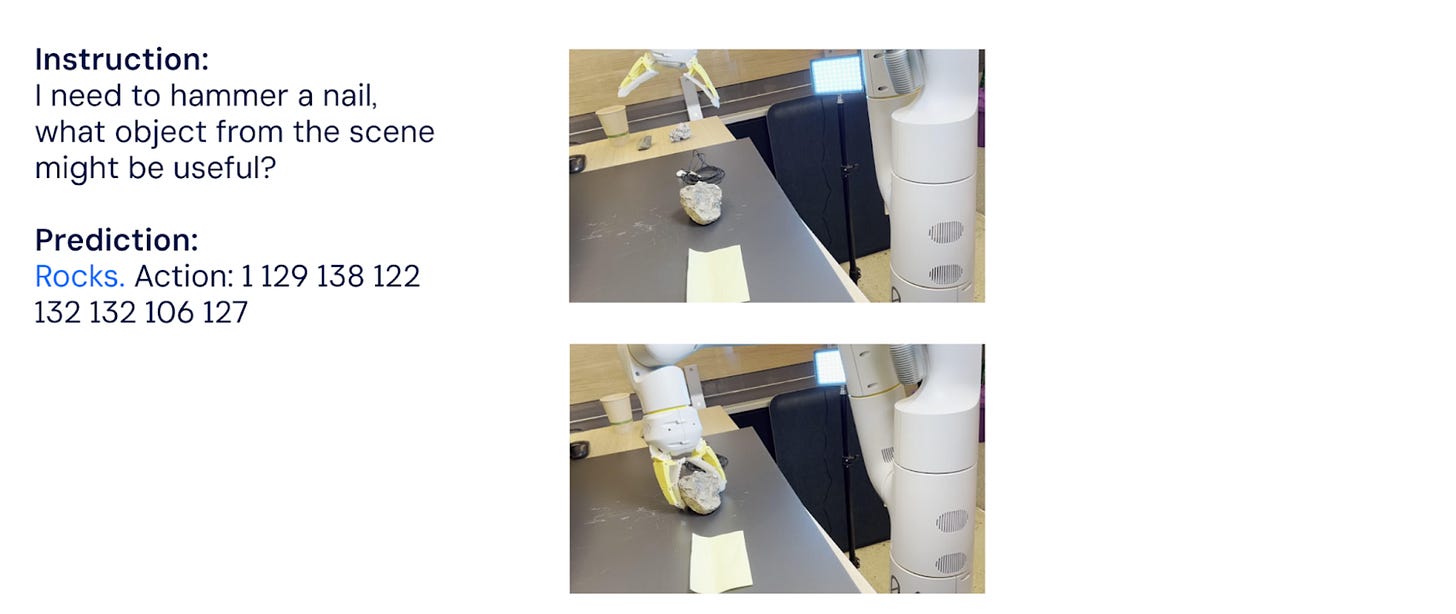

It can follow user commands by thinking things through. This means that it selects a relevant object category, comes up with descriptions and makes plans with several steps. Like, it can figure out what to use instead of a hammer (a rock):

Recent research brings us closer to useful robots. It's improving data collection, making robots faster and teaching them more general capabilities. Soon, with basic reasoning, robots might even create their own training data.

1.9 - Chapter 1 summary

AI is the next major platform shift and could solve many of our problems. We are already seeing its impact, but we are still a long way from the enormous growth it promises to bring to our economy. The limited capabilities of existing models hold back much of the potential value.

The default way to get capabilities is through scaling: building a model one order of magnitude bigger with each training attempt. However, for complex tasks, brute-force training proves to be inefficient. To overcome this, researchers are testing sample-efficient methods:

Multimodality for processing and understanding images, audio and video.

Agency to learn autonomously in simulated environments.

Robotics to introduce additional modalities and make grounding more general.

This brings us to the next big question.

Chapter 2. What will it take to unlock the technologies of the future?

2.1 - A well-trained model can't do much if it doesn't know how to plan

Ok, let's say we've made great progress in scaling and grounding. We have an AI that has a solid understanding of the world and is able to make accurate predictions about lots of stuff. But are we finished? Is there something more we might need?

Yes, something on top of this advanced model must learn to plan in order to reach its goals. Planning is about finding the best sequence of actions in a field of possibilities. To do this, you need to:

Think about the future and decide in advance what needs to be done

Simulate the results of your actions and line them up logically

Consider your resources and evaluate the final outcome

Make a decision about the best course of action

To make it simple: choose the right actions ➝ arrange them ➝ evaluate how good the plan is ➝ either make changes or decide it's good enough to go. This involves a lot of abstract thinking and mental simulation, e.g. knowing how one thing can lead to another or weighing up the trade-offs. This may seem too complex for machines and quite simple for humans. But is it really?
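For the curious, the loop above fits in a few lines of code. This is a deliberately tiny sketch with a made-up errand example: the stops and their positions along a street are hypothetical, and the "make changes" step is a simple swap of two stops, which is a local search and is not guaranteed to find the best plan in general.

```python
from itertools import combinations
from typing import List, Optional

# Hypothetical errands and their positions along one street (home is at 0).
STOPS = {"bakery": 8, "pharmacy": 2, "post office": 5}


def evaluate(plan: List[str]) -> int:
    """Total distance walked when visiting the stops in the given order."""
    position, total = 0, 0
    for stop in plan:
        total += abs(STOPS[stop] - position)
        position = STOPS[stop]
    return total


def improve(plan: List[str]) -> Optional[List[str]]:
    """Try swapping every pair of steps; return the best cheaper plan, if any."""
    best, best_cost = None, evaluate(plan)
    for i, j in combinations(range(len(plan)), 2):
        candidate = list(plan)
        candidate[i], candidate[j] = candidate[j], candidate[i]
        if evaluate(candidate) < best_cost:
            best, best_cost = candidate, evaluate(candidate)
    return best


def make_plan(actions: List[str]) -> List[str]:
    plan = list(actions)             # choose the actions and arrange them
    while True:
        better = improve(plan)       # evaluate the alternatives
        if better is None:           # no swap helps: good enough to go
            return plan
        plan = better                # make changes and go around again


plan = make_plan(["bakery", "pharmacy", "post office"])
print(plan, "->", evaluate(plan), "blocks")
```

Three errands are easy. The trouble starts when the number of possible plans explodes, which is where the next sections pick up.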

2.2 - DeepMind uses games to learn planning skills

The DeepMind team has a well-known passion for games and simulations. They provide environments with easily reproducible tasks, simple rules and a clear definition of winning. That makes them useful for experiments to better understand the advanced capabilities of intelligence.

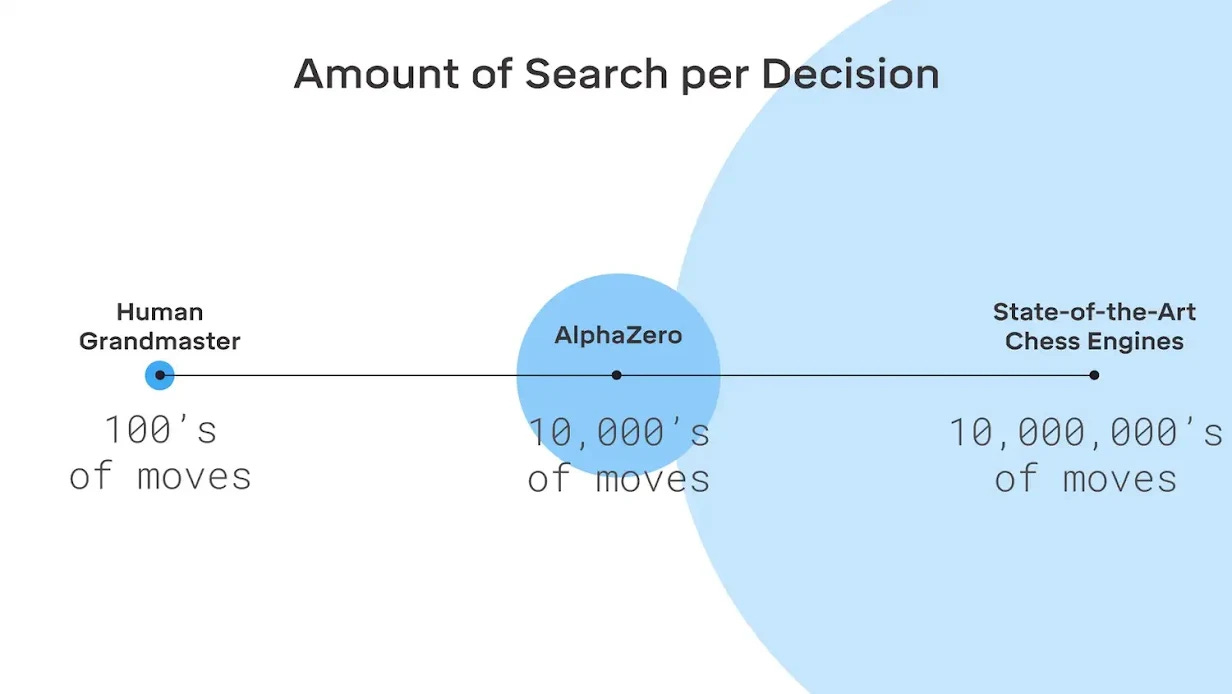

Sometimes you can replace planning by simply evaluating every possible outcome. Computers are good at this, but in certain cases you're doomed if you're not efficient. DeepMind chose the game of Go as an extreme case of such a situation, where you can't fight complexity with computational brute force, and trained a system that is surprisingly good at it.
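How doomed, exactly? Here is a rough estimate of the game tree size as branching factor raised to the power of game length. The numbers are the commonly cited ballpark figures (about 35 moves per position over about 80 turns for chess, about 250 over about 150 for Go), so treat the result as an order-of-magnitude sketch and not a precise count.

```python
import math


def log10_game_tree(branching: int, depth: int) -> float:
    """log10 of branching ** depth, the rough number of possible games."""
    return depth * math.log10(branching)


# Commonly cited rough estimates, not exact values.
chess = log10_game_tree(branching=35, depth=80)
go = log10_game_tree(branching=250, depth=150)
ATOMS_IN_UNIVERSE_LOG10 = 80  # another commonly cited rough estimate

print(f"Chess: about 10^{chess:.0f} possible games")
print(f"Go:    about 10^{go:.0f} possible games")
print(f"Go exceeds the atom count by about 10^{go - ATOMS_IN_UNIVERSE_LOG10:.0f}")
```

Faster hardware does not close a gap of hundreds of orders of magnitude. You need a way to look at only a tiny, well-chosen part of the tree.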

They made a movie showing how AlphaGo defeats Lee Sedol, a top human player with 18 international titles. The most interesting thing about the movie is to see how the Go experts and viewers go from thinking it's a total joke to being shocked at what the AI can do.

AlphaGo is fairly straightforward. It combines a few neural networks with a search algorithm that controls them. The policy network narrows down the most promising moves, and the value network judges the board positions. The search algorithm uses a technique called Monte Carlo Tree Search (MCTS) to decide which moves to look into.
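To show the division of labor, here is a heavily simplified sketch. It is not AlphaGo's code: the game is a toy (take 1 to 3 stones, whoever takes the last stone wins), the "networks" are hand-written heuristics I made up, and a small depth-limited search stands in for MCTS. What it keeps is the structure: the policy decides which moves are worth looking at, the value judges positions where the search stops, and the search ties them together.

```python
from typing import Dict, List

MAX_TAKE = 3  # toy game: take 1-3 stones; whoever takes the last stone wins


def legal_moves(stones: int) -> List[int]:
    return [take for take in range(1, MAX_TAKE + 1) if take <= stones]


def policy(stones: int) -> Dict[int, float]:
    """Stand-in for a policy network: a prior score for each legal move."""
    return {take: (1.0 if (stones - take) % 4 == 0 else 0.1)
            for take in legal_moves(stones)}


def value(stones: int) -> float:
    """Stand-in for a value network: score for the player to move, in [-1, 1]."""
    if stones == 0:
        return -1.0   # the previous player took the last stone and won
    return 0.0        # crude "don't know" guess for every other position


def top_candidates(stones: int, top_k: int) -> List[int]:
    """Keep only the top_k moves the policy likes best."""
    prior = policy(stones)
    return sorted(prior, key=prior.get, reverse=True)[:top_k]


def search(stones: int, depth: int, top_k: int = 2) -> float:
    """Depth-limited negamax over the policy's candidates (not real MCTS)."""
    if stones == 0 or depth == 0:
        return value(stones)
    return max(-search(stones - take, depth - 1, top_k)
               for take in top_candidates(stones, top_k))


def best_move(stones: int, depth: int = 6, top_k: int = 2) -> int:
    return max(top_candidates(stones, top_k),
               key=lambda take: -search(stones - take, depth - 1, top_k))


print(best_move(5))  # 1: leaves 4 stones, a losing position for the opponent
```

Notice that the third legal move is never examined at all. In Go, that kind of pruning is the difference between an impossible search and a feasible one.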

This approach is quite different from the way people play Go. Over a short horizon, it is difficult for us to evaluate the position and determine who is winning. So players use their gut feeling and high-level principles that they've learned over years of practice. But AlphaGo can look at way more possible moves than humans typically do. Over time, it builds up little edges that we may not see right away. As the game goes on, these small advantages snowball and it eventually wins.

2.3 - Planning has greatly improved in the next generation of the model

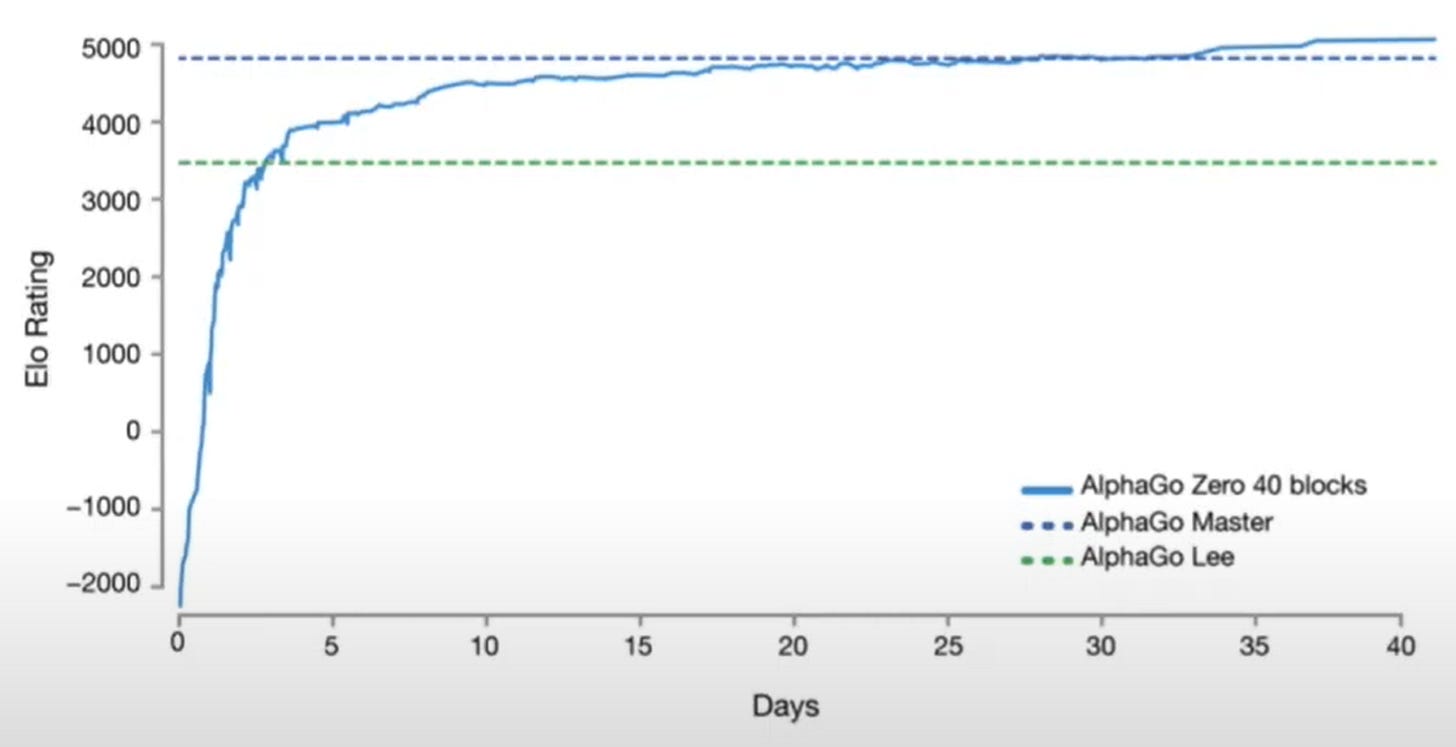

AlphaGo learned from expert humans (supervised learning) and by playing against itself (reinforcement learning). But AlphaGo Zero no longer used expert data. Instead, the model learned by playing against itself for over a month. It started with random moves and got better by learning from its wins and losses. They also improved the efficiency of the search tree by predicting which moves should be evaluated first.

The researchers tested the new model against an older version by playing 100 games, and it won every single one of them. The AI's skill went beyond what humans can do. So much so that Lee Sedol decided to retire. He said that no matter how hard he tried, there was still something unbeatable out there.

It mastered the entirety of human knowledge about playing Go and then threw it away. And guess what? There's more.

2.4 - DeepMind continues to experiment with planning

A few experiments were done to see how to make planning even more efficient. They trained a model called AlphaZero to play various games better than humans. It's a more generalized version of AlphaGo Zero and can learn any two-player game with perfect information. And it does so without using any human data.

Games like Go and Chess offer perfect modeling environments: you always know the next state after you make a move. MuZero, another system, can do a tree search in less predictable environments. It has learned to play 50 Atari games without any prior knowledge, not even the rules.

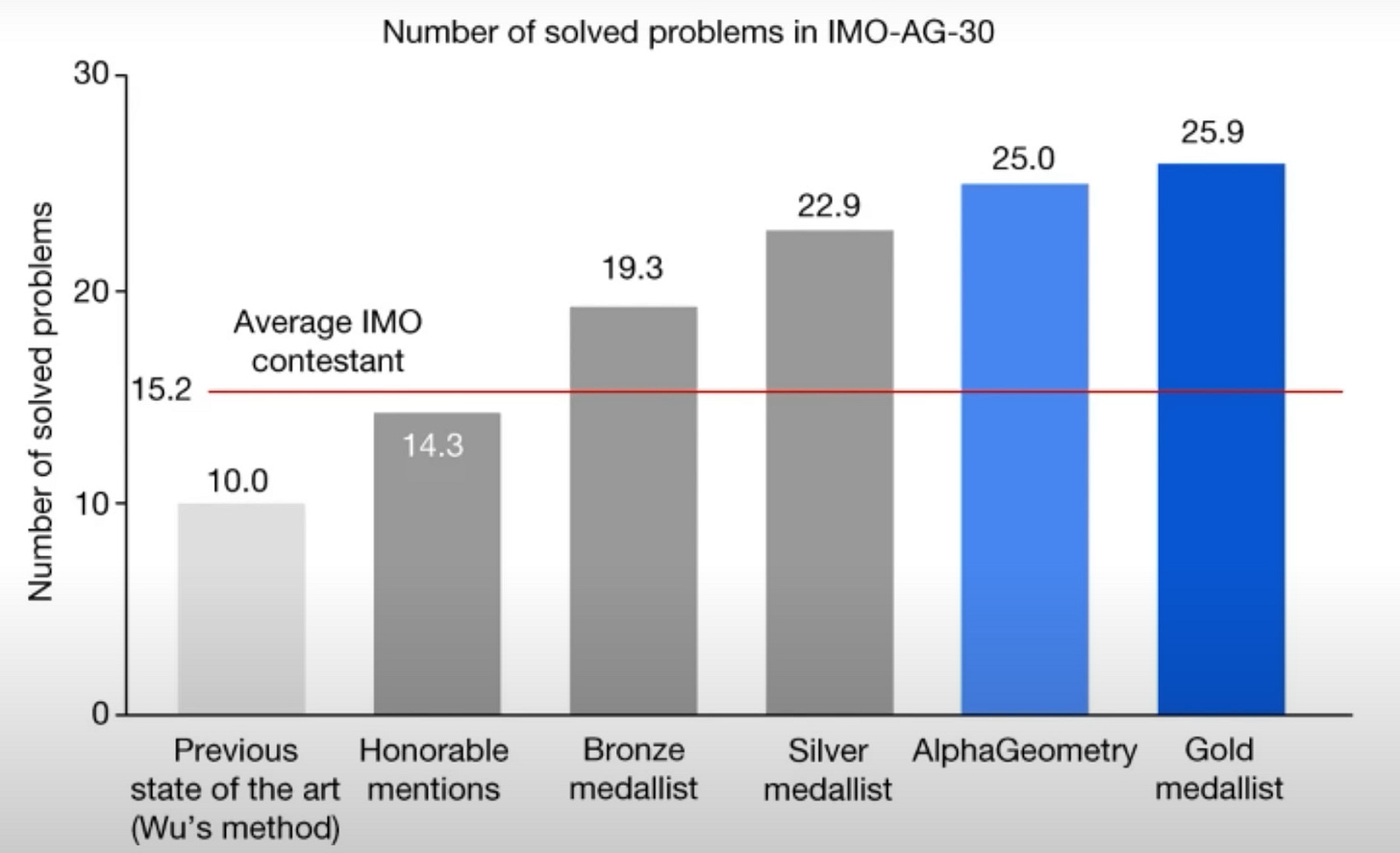

The last model I want to mention is AlphaGeometry. It scored almost perfectly on International Mathematical Olympiad (IMO) geometry problems.

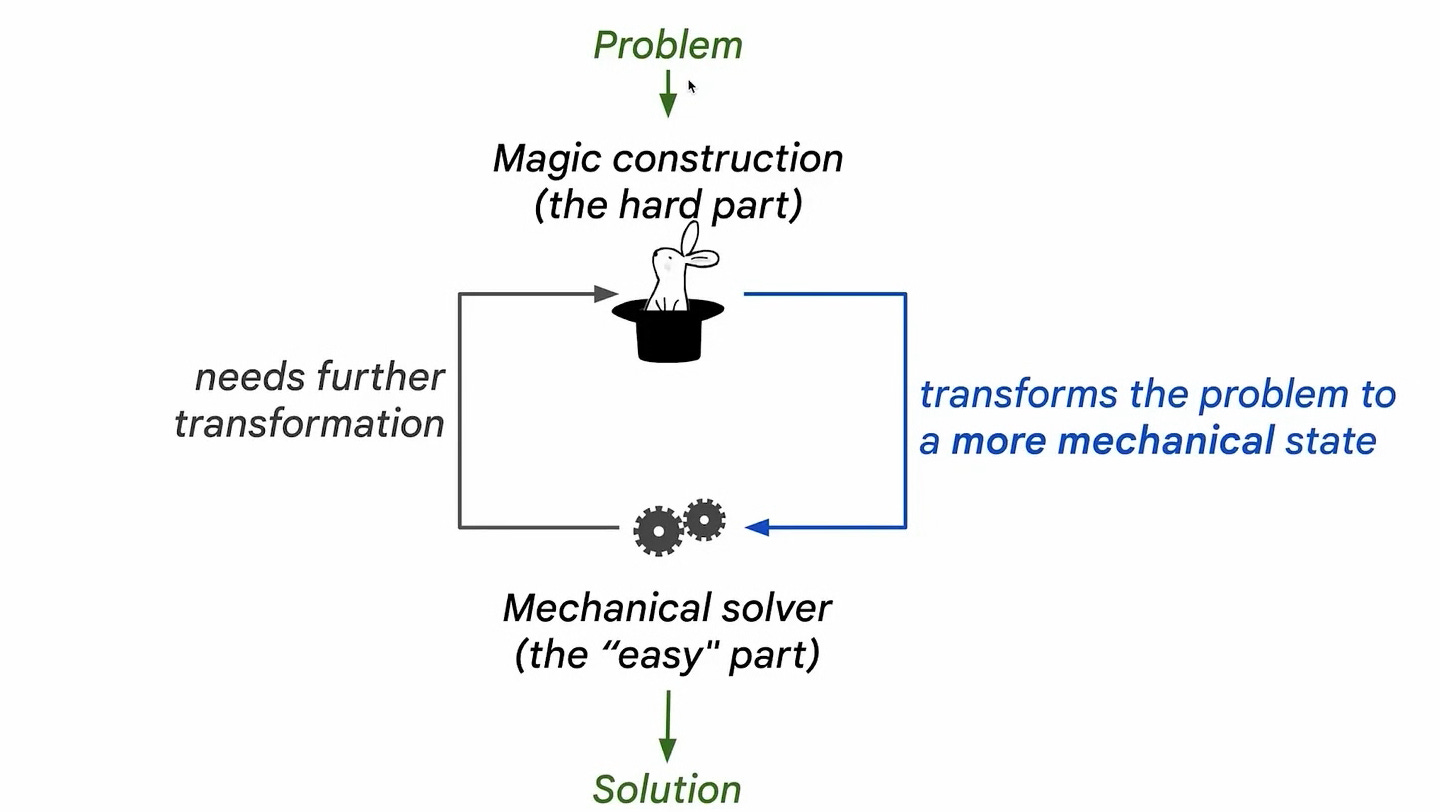

In geometry, it's easier to check if your solution works than to find it. Some geometry problems are so simple that a rule-based deduction engine can solve them mechanically. But for the harder problems, you have to come up with creative ideas first. Then you turn these ideas into simpler mechanical problems that you can solve step by step. So again, the model is a combination of idea generation and validation:

AlphaGeometry develops the same idea: brute-force search can be cost-efficient if done smartly. Humans are super sample-efficient because we are not built for Monte Carlo Tree Search. So we have to use our intuition to narrow down the search. This idea applies to models too: while search has computational limits, a good model reduces the need for extensive search. IMO's hardest problems often require proofs with 100+ steps. And yet a tiny model running on two-generation-old GPUs can still handle them! Here is a great video about it.

Does this mean that planning, which helps models achieve complex goals, is the same as searching? I don't think so. Not all situations are like games or geometry, where it is easy to judge outcomes. The evaluation of actions and their results is often less obvious. Yet, AlphaGo has shown us that human knowledge, intuition and creativity are not divine. In some cases, good search and evaluation can handle planning. And for everything else, with ongoing research, solutions seem within reach.

2.5 - Can existing systems move science forward?

With such cool advances in planning, can't we start to capture some of the AI value I talked about in Chapter 1? Even small improvements can make a big difference at the scale of Google and put you in a strong position to get resources for future research. It's been done before: DeepMind joined Google 10 years ago and set up an AI to control cooling in data centers. This reduced the energy use by 30% for the whole infrastructure!

AI is a unique technology that boosts everything it touches, from cooling data centers to enhancing malaria vaccines. But to add value AI needs something to be applied to; and the best possible “something” is science. Scientific breakthroughs fuel economic growth by laying the foundation for tech innovations. They increase productivity, spawn new industries and improve lives through advancements like better healthcare.

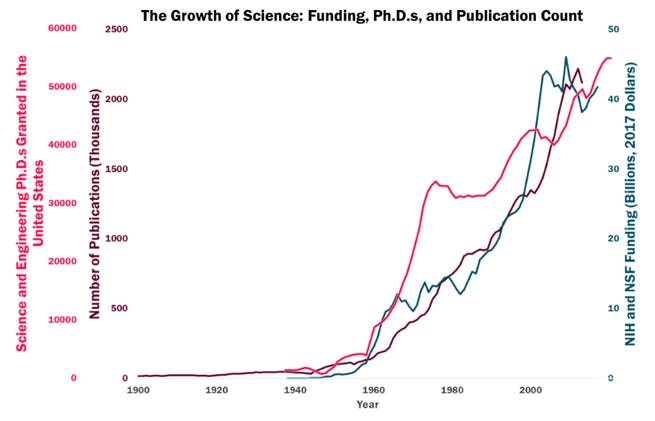

(Source: Patrick Collison and Michael Nielsen)

The cost of science has risen over the last 50 years and we're seeing fewer and fewer breakthroughs because new discoveries are increasingly difficult to find. Today, researchers must have deeper knowledge and work with larger teams to make progress. It seems we need the help of an AI Scientist to unlock the tech of the future, such as preventive medicine, and eventually grow our economy tenfold:

We are now able to do part of the work of such a scientist: triaging a solution space by checking weak ideas and quickly discarding them. This helps save time and effort when it comes to finding a working solution, as with AlphaFold's transition from discovery to engineering. But the real value lies elsewhere.

The hardest part of science is figuring out what questions to ask and how to define the problem. That seems even harder than just solving planning.

2.6 - What does an AGI recipe look like?

Demis thinks the ultimate general intelligence will have three main components:

A scaled large language model that automatically gets basic general capabilities.

A grounding component that helps the model develop an internal model of the real world.

A planning component that can make good guesses about the world to find better answers.

I believe it's not a complete list. Demis has focused on planning, but there are other important capabilities that a model needs to be truly intelligent. Here is Yann LeCun talking about this topic:

To make things clearer, there are four advanced capabilities: Grounding (making sense of the world by creating an internal model of the world), Planning, Memory, and Reasoning. We've covered Grounding and Planning before. Here's Sam Altman on the Lex Fridman podcast discussing Memory and Reasoning:

Memory allows the model to better grasp context, recognize complex patterns, follow instructions longer, and become more helpful as it works on a problem over time.

Reasoning or slow thinking helps when we find ourselves in new and difficult situations that require deep thinking and problem solving. It comes in handy when you realize that your first gut reaction isn't enough and you take time to think things through more carefully.

The recipe looks better now, but I'd like to add one more thing.

2.7 - Can we trust a model that is too complex to understand?

We cannot fully explain how the model works. Plus, new capabilities usually emerge unpredictably. So we must be extra cautious before relying on it for high-stakes problems. We can't test it the way we QA a plane's autopilot or a datacenter cooling system. Could a really smart system trick you? Maybe. At the moment we don't have good tools to spot that.

Demis talks about experimenting in a sandbox to create a narrow system that can detect lies. That's probably a step in the right direction, but it's still a complex problem with too many unknowns. AI Safety is a big field with many problems like this. It's definitely a critical fourth step in the three-part process of developing AGI. Here's a rather vague answer that Google is being bold and responsible, which seems like a clever way to dodge the real question:

He thinks we must be careful and humble with the transformative power of AGI. This means that we should predict outcomes using the scientific method and not test dangerous things live on a large scale. He supports automated evaluation and early testing in simulated environments to fix dangerous capabilities before they cause harm.

However, there is a lack of a clear and well-explained safety strategy. Fortunately, Shane Legg (co-founder of DeepMind) and Demis are certain that these issues are serious. I believe Google isn't quite ready to speak openly about it yet. What we've seen so far is more of a PR Safety framework and not true AI Safety.

2.8 - Chapter 2 summary

To unlock the tech of the future, we need AI that can assist us in science. Tenfold economic growth depends on how fast we can make important scientific discoveries. Without AI, it will be increasingly difficult to keep up the pace.

DeepMind's work shows it is great at planning with smart search and evaluation methods. But the hardest part of science is asking the right questions and formulating problems. This requires systems that are even more capable.

On top of a scaled large language model, AGI needs other components: it must have Grounding to grasp the real world, Planning to achieve its goals, Memory to learn over time, and Reasoning for slow thinking when necessary. Lastly, we still need a safety strategy for this kind of model.

Chapter 3. How can you stay on top of things and not go crazy?

3.1 - We now live in a very intense world

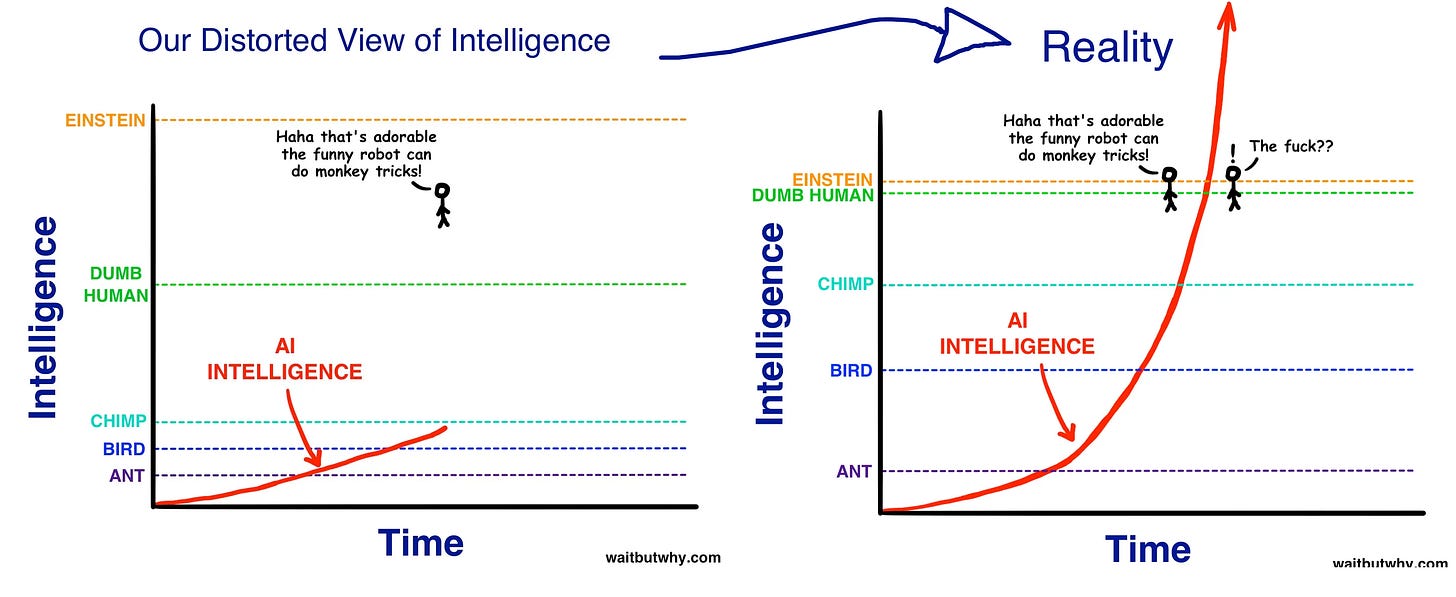

Almost a decade ago, Tim Urban wrote a cool longread about AI. Tim is known for his excellent explanations and stick figure drawings. He starts the article with a quote from an essay Vernor Vinge wrote in 1993:

We are on the edge of change comparable to the rise of human life on Earth.

Then he asks a follow-up question: What does it feel like to stand here?

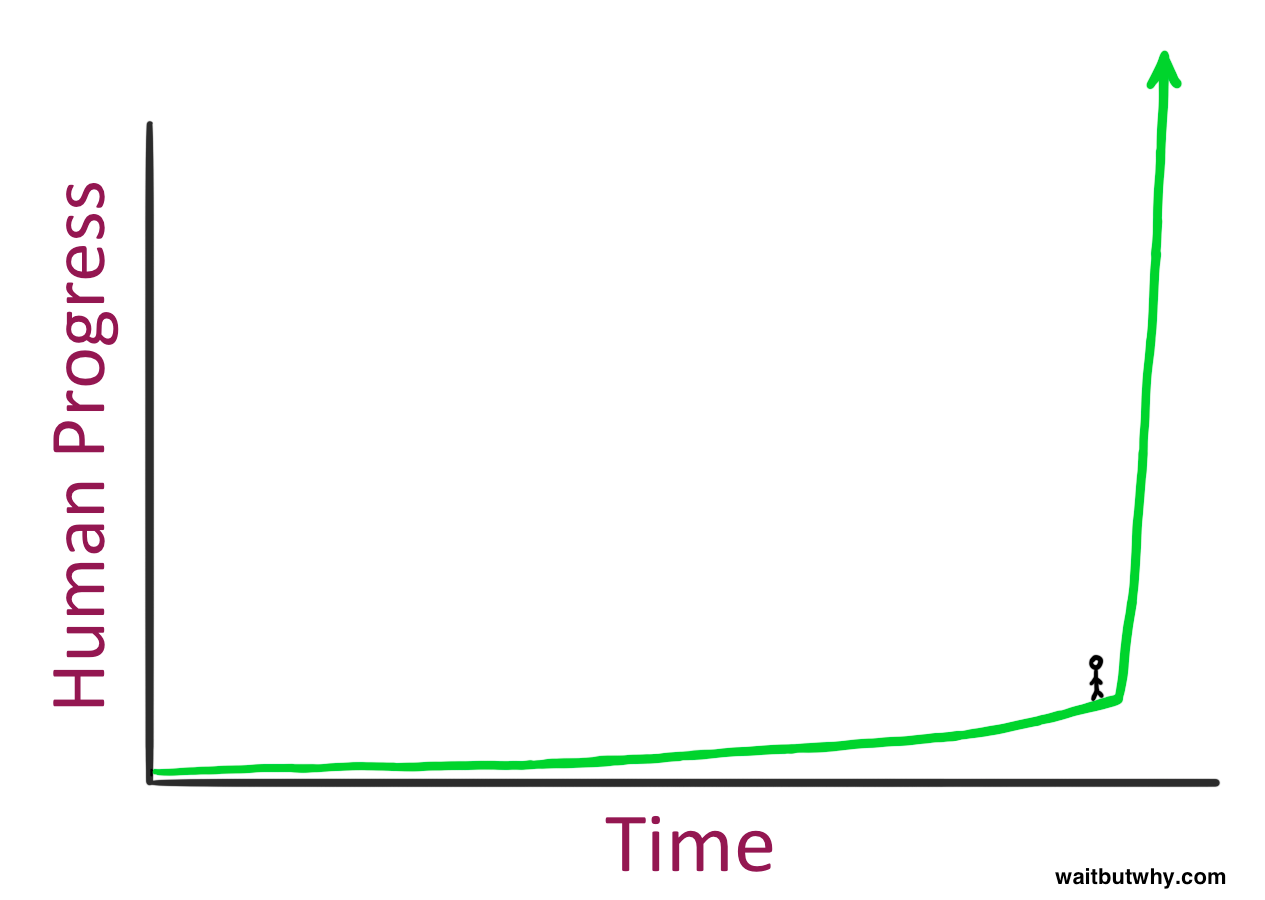

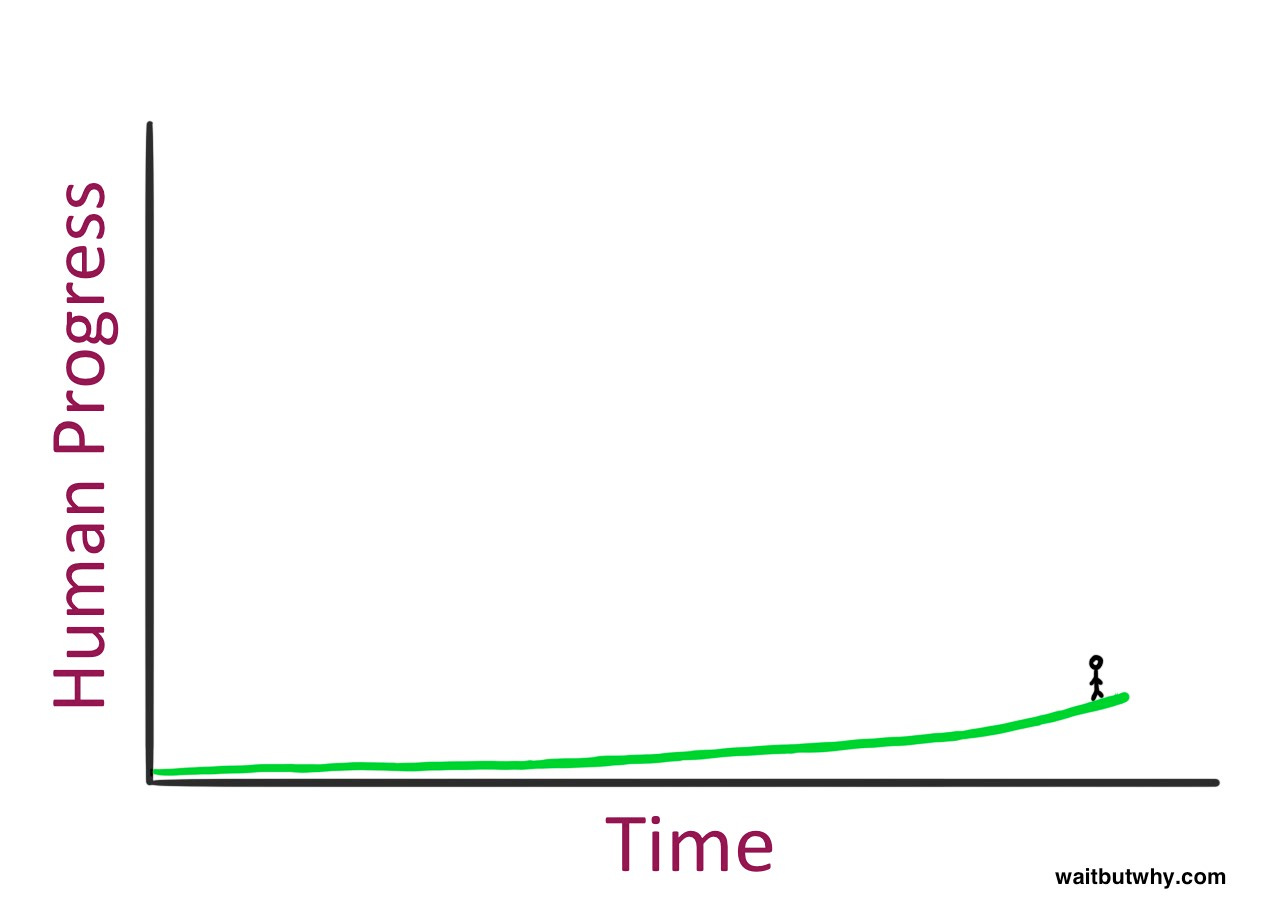

It seems like a pretty intense place to be standing—but then you have to remember something about what it’s like to stand on a time graph: you can’t see what’s to your right. So here’s how it actually feels to stand there:

Which probably feels pretty normal…

Tim points out that when you live in a particular moment in history, the progress around you seems ordinary. Only in hindsight do you realize how fast things are changing compared to the past. He is setting up the idea that we're in an era of surprisingly rapid tech advancements and may not see it because we're right in the midst of it all. Plus, things are speeding up even more, so we could witness bigger changes soon.

But that was 10 years ago. Now we can see and feel the progress:

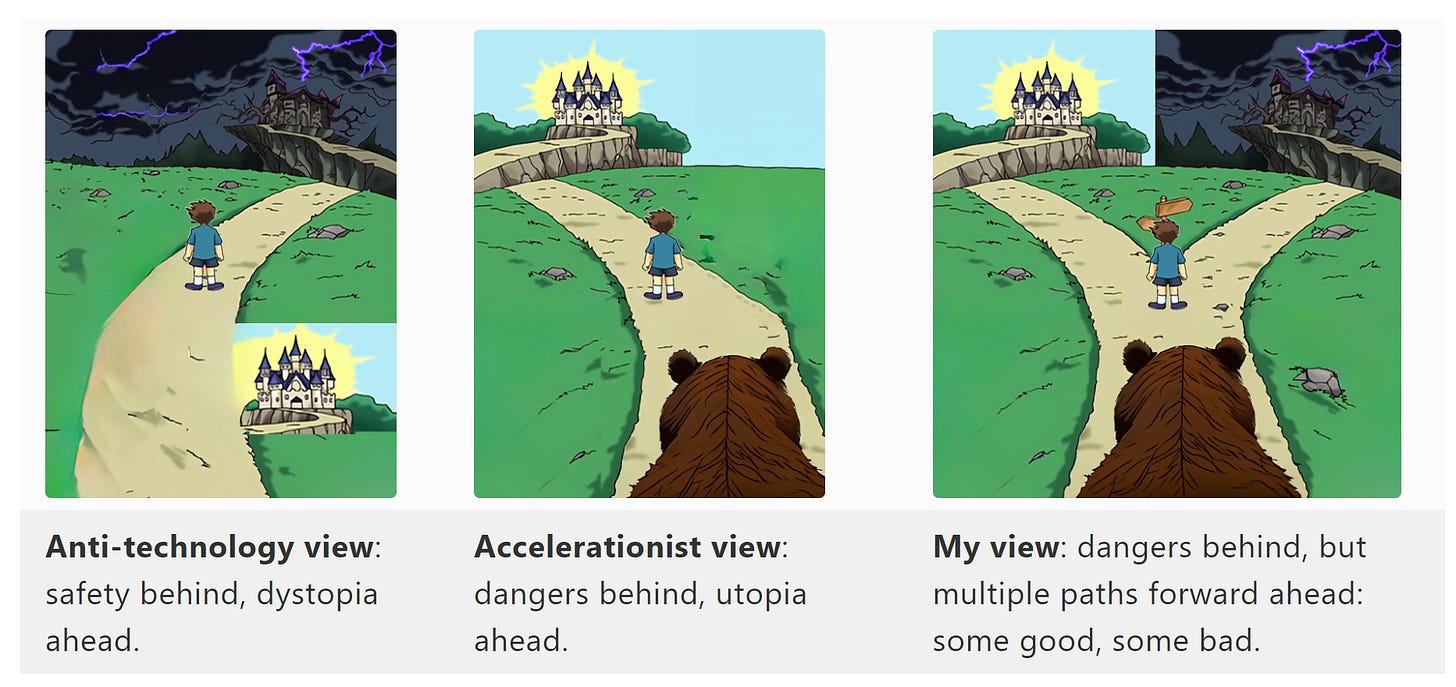

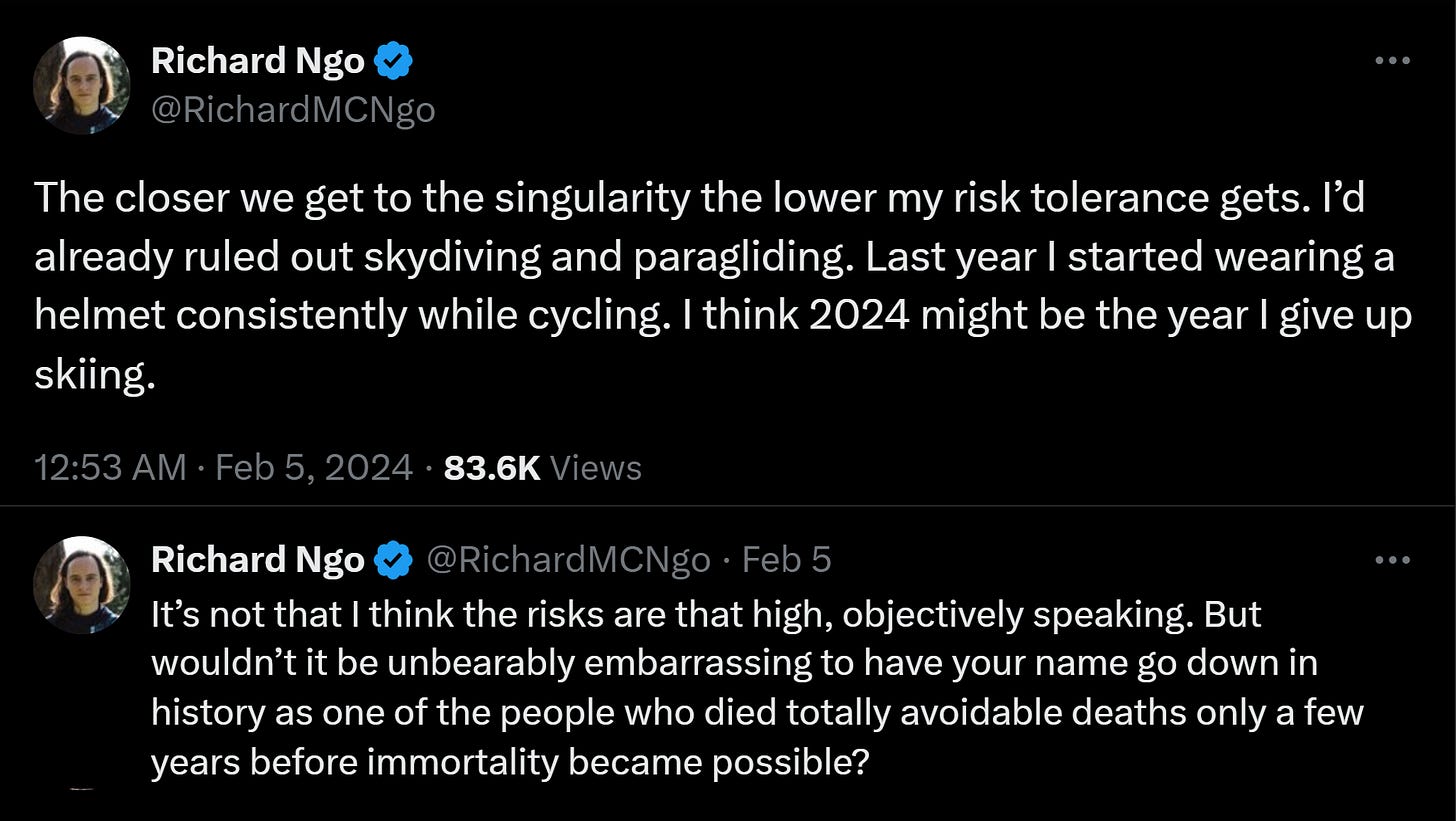

Planning life in this kind of world feels odd: it's a paralyzing anticipation of big changes to come, which can lead to utopia or extinction, as Vitalik Buterin pictured:

3.2 - There have been periods like this before in history — what can we learn from them?

More from Tim citing someone famous:

This pattern—human progress moving quicker and quicker as time goes on—is what futurist Ray Kurzweil calls human history’s Law of Accelerating Returns. This happens because more advanced societies have the ability to progress at a faster rate than less advanced societies—because they’re more advanced.

In the course of history, we've seen three major periods in which tech progress has accelerated dramatically. They made the advances of previous eras seem insignificant in comparison. These "revolutions" fundamentally changed how we live, work and interact with everything around us.

1. Vernor Vinge speaks of the Cognitive Revolution in early human history. It happened between 70,000 and 30,000 years ago, when our ancestors developed language and abstract thinking. Before that, life was all about survival. But after, we began to accumulate knowledge and make complex tools. This sped up progress and set us on a new civilizational trajectory, splitting our history into a "before" and an "after".

2. About 12,000 years ago, the Agrarian Revolution began to spread. By 5,000 years ago, agriculture had become the way of life for most people. Before that, life was a constant hunt for food. But with farming, we grew our own food, settled down in one place and began to build cities. We left behind the exciting but risky life of hunter-gatherers for a faster pace and a new shift in trajectory. This change brought its own set of problems: massive population growth, slavery, epidemics, harsh rulers and less power for women.

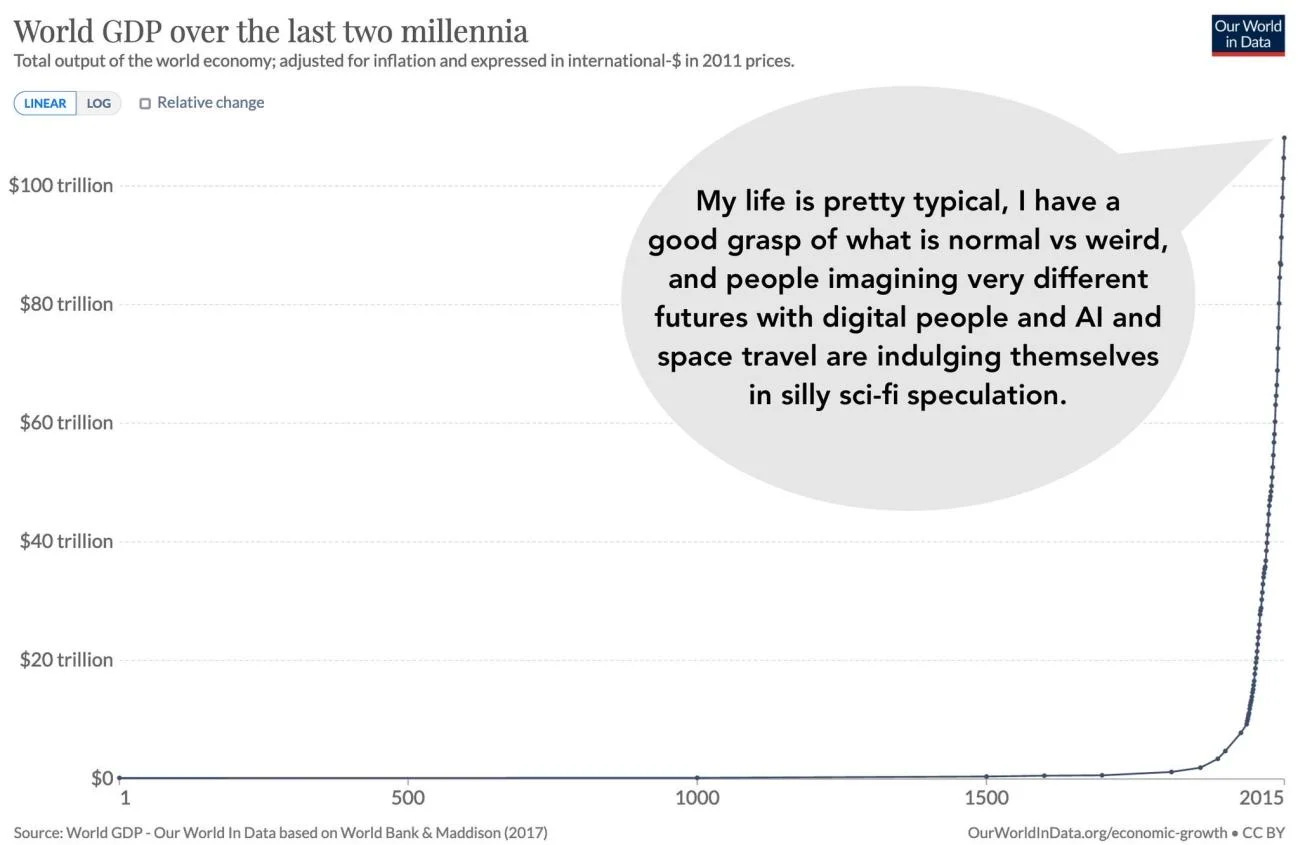

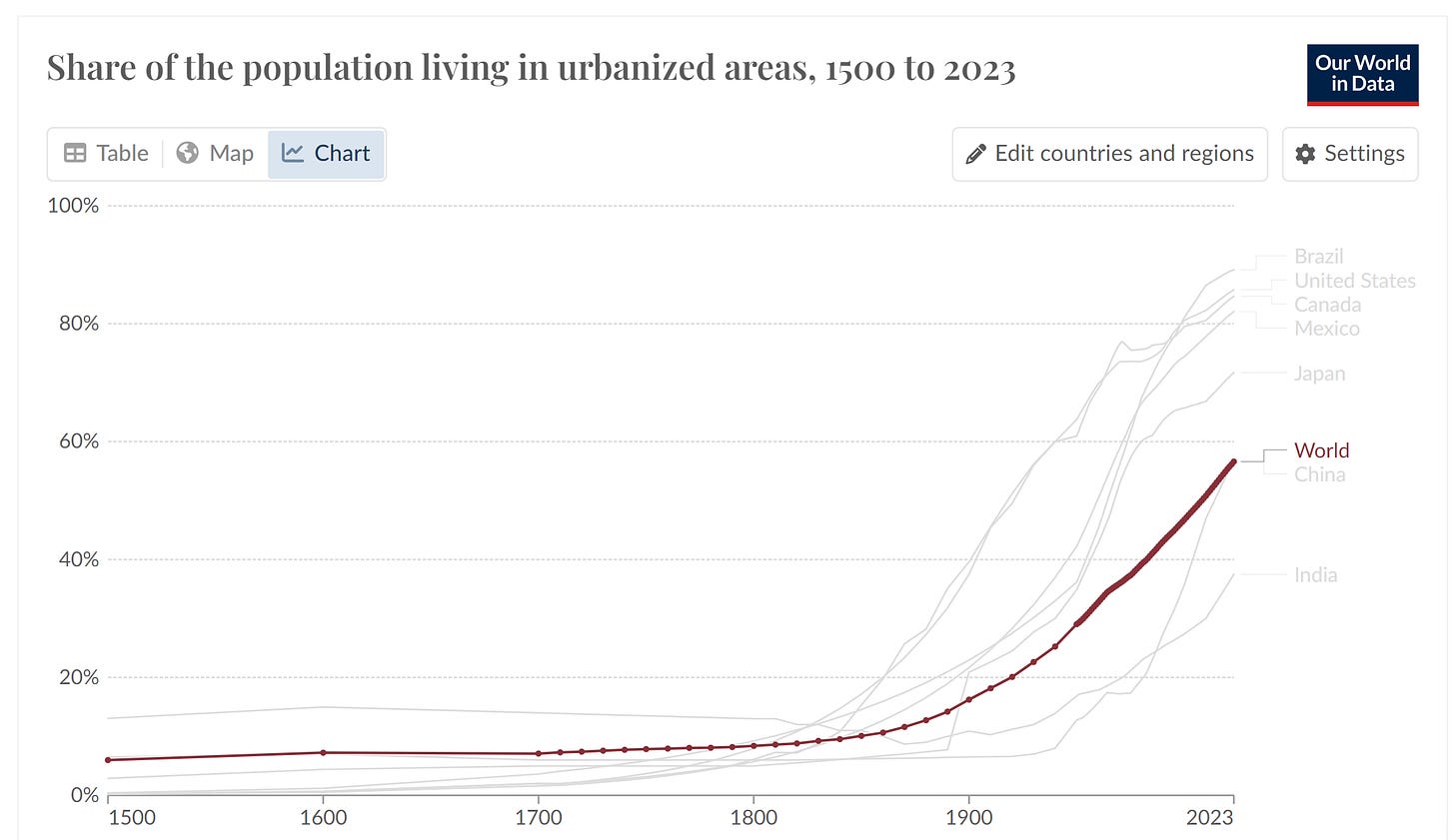

3. We have the most data on the Industrial Revolution, so I can finally share some graphs with you! It started in Britain around 1760 and spread across Europe and North America over the next hundred years. Life was pretty rough before the steam engine and the railroad came along. But after the Industrial Revolution, most of the things we care about improved enormously. We lived longer, earned more money and discovered the magic of technology.

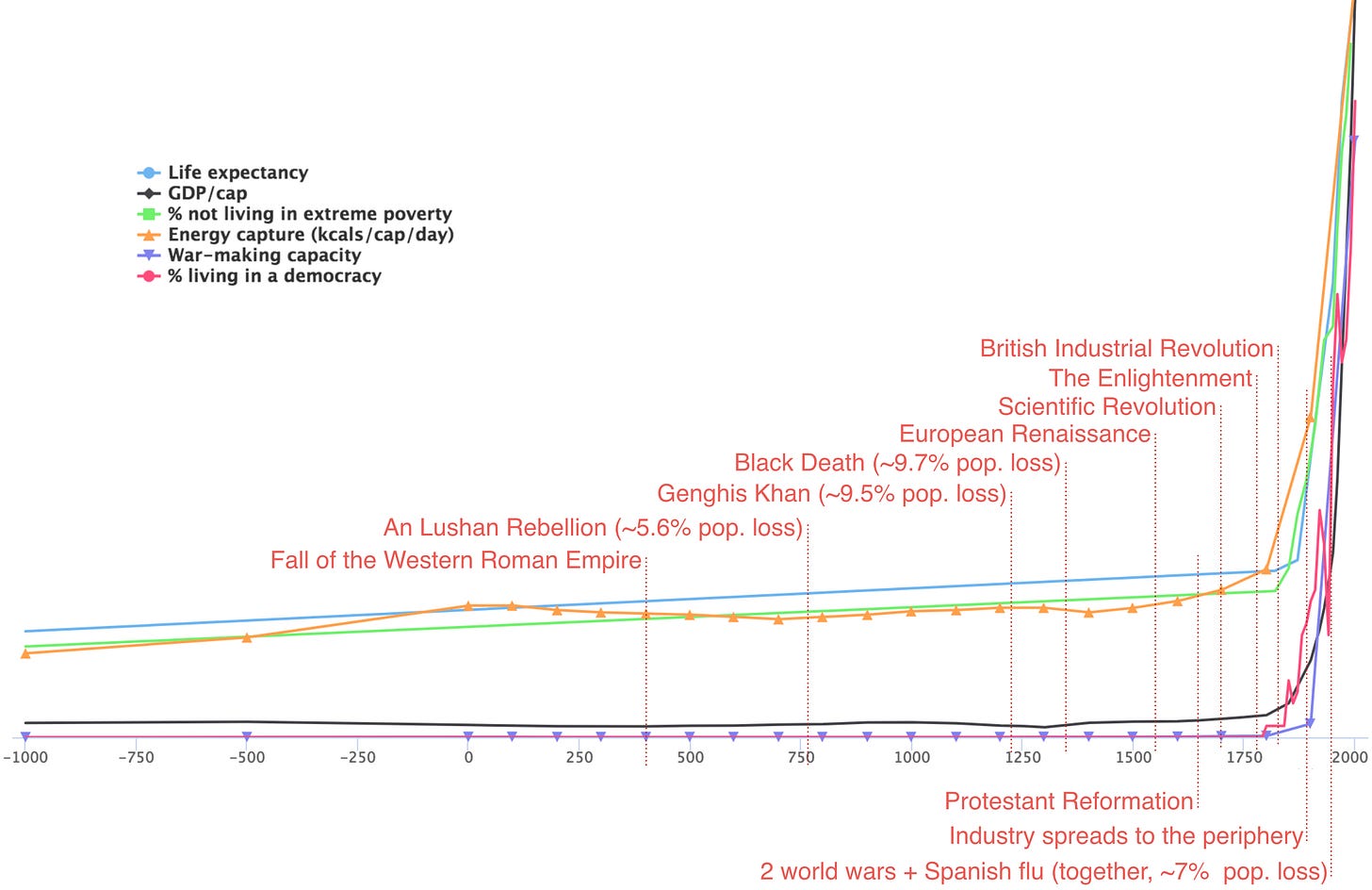

You can see a pattern in these three examples: things were bad for a very long time, then a revolution sparked an improved "after." Let's look at Luke Muehlhauser's incredible research. He plots six well-being metrics over 3,000 years of civilization, along with some major historical events, in one picture:

This is not the impression of history I got from the world history books I read in school. Those books tended to go on at length about the transformative impact of the wheel or writing or money or cavalry, or the conquering of this society by that other society, or the rise of this or that religion, or the disintegration of the Western Roman Empire, or the Black Death, or the Protestant Reformation, or the Scientific Revolution.

But they could have ended each of those chapters by saying “Despite these developments, global human well-being remained roughly the same as it had been for millennia, by every measure we have access to”.

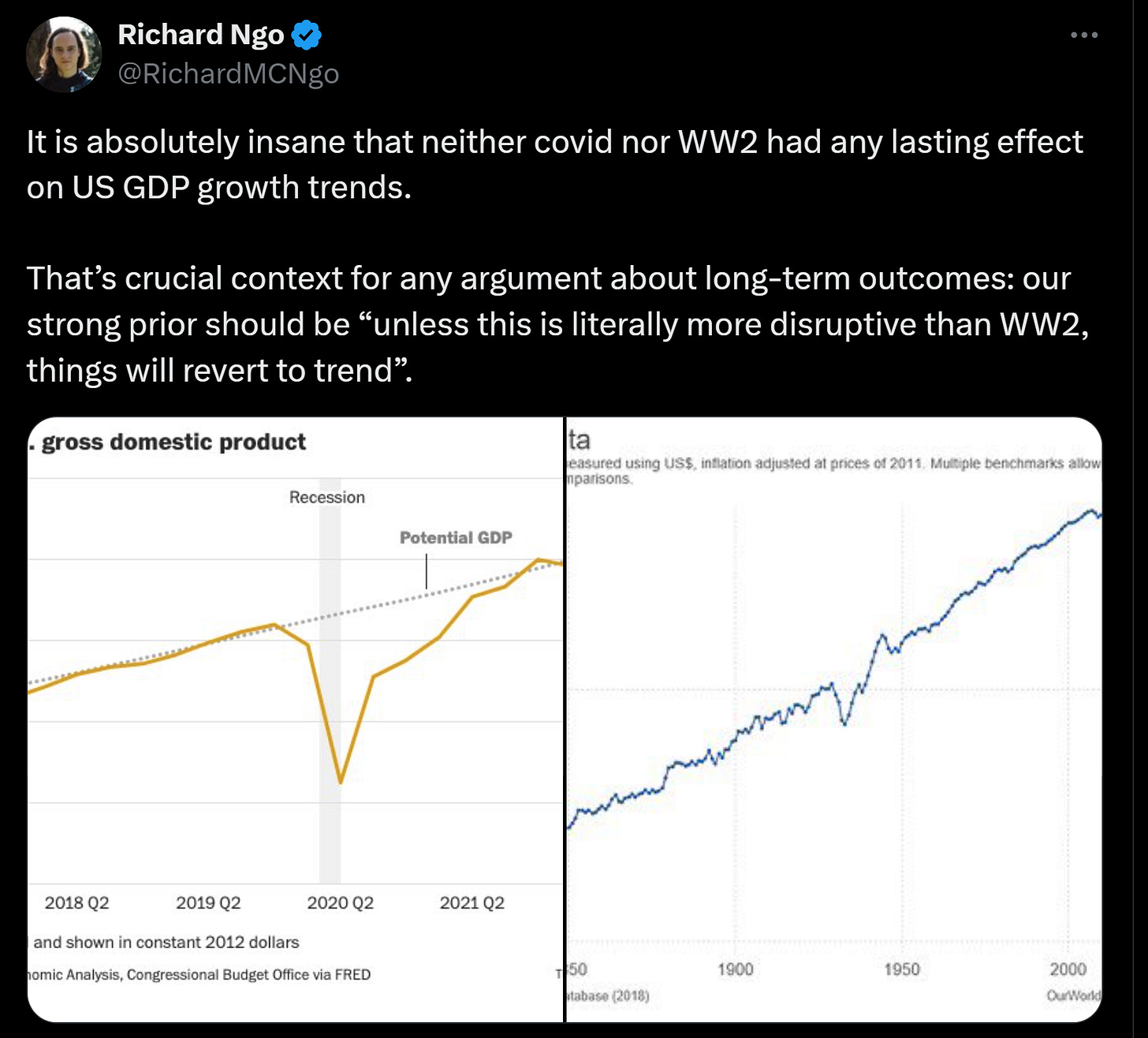

It's not that the events in Luke's quote weren't a big deal at the time; they were. But even the worst events, like the Black Death and Genghis Khan's conquests, killed about 10% of the world's population each, and they still didn't knock civilization off its positive path. World War I and the Spanish Flu combined killed 4.1%. Yet, as you can see, these were only brief disruptions, with no lasting bend in the curve. It's as if some gods of straight lines keep putting things back on track.

(this idea comes from Scott Alexander and Richard Ngo)

In hindsight, the best day is always tomorrow, and yesterday doesn't matter. There has never been a reverse revolution that has slowed the pace. But there have been three normal revolutions that are really important for our progress.

3.3 - What can we expect this time and how is the new period different?

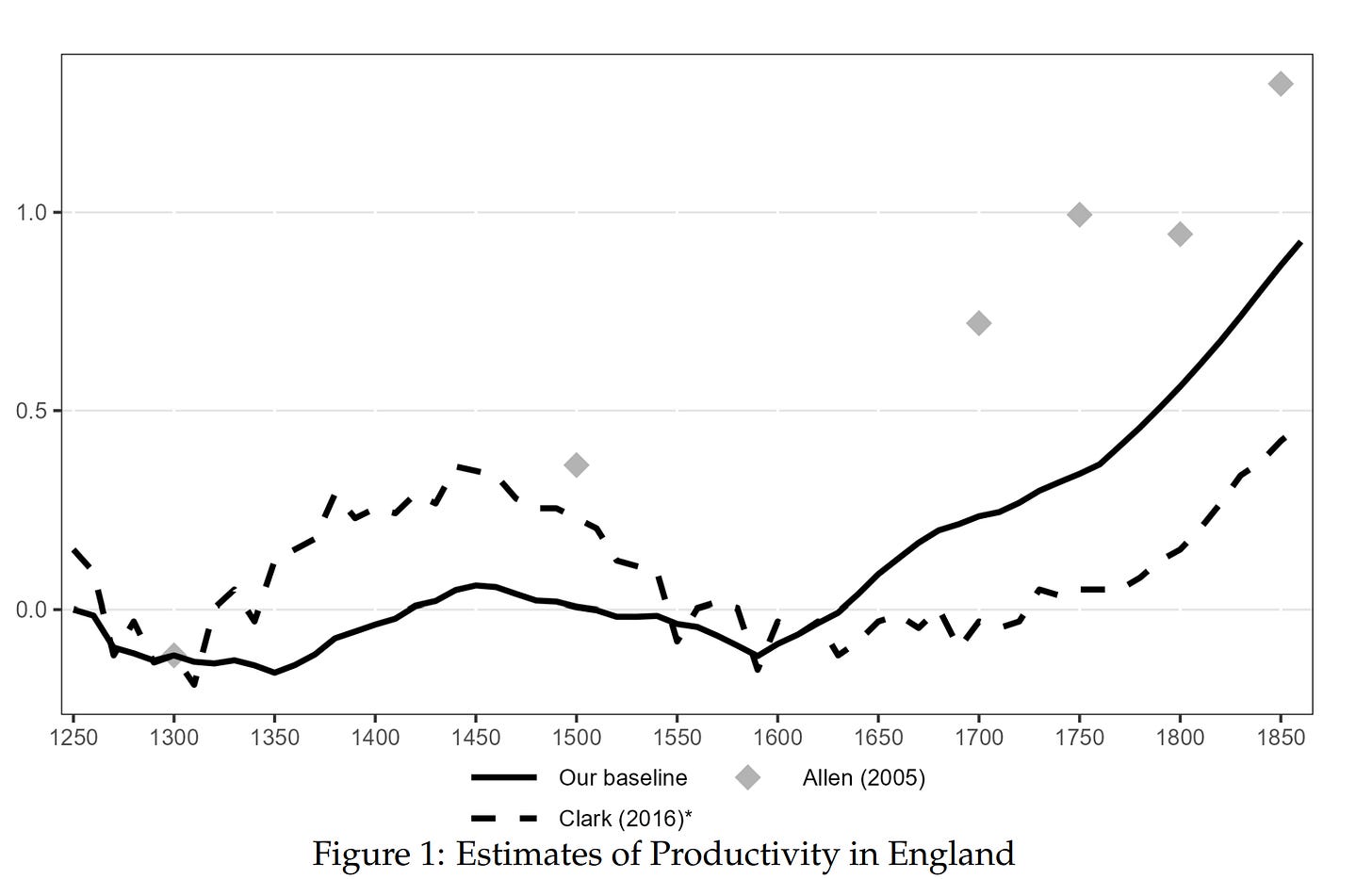

We are at the start of a new curve-bending period — the Intelligence Revolution. Once again, there seems to be a division between what was before and what will come after. Luke mentions in his article that a major reason for the rapid growth during the Industrial era was a significant rise in productivity. Check out this interesting chart from a 2023 study by Paul Bouscasse, Emi Nakamura and Jon Steinsson. It illustrates the changes in England during the Industrial Revolution:

They compared their study with two others to better understand when it all began. Look at the solid line, which shows that the productivity of the workforce doubled within 200 years. Then, veeery slowly, Ray Kurzweil's Law of Accelerating Returns gains power to change lives around the world:

The link between productivity and the economy is quite fascinating. Check out this pattern: Industrial Revolution → Steady linear productivity growth → A bend in the trajectory → Rapid exponential economic growth. Basically, even a linear rise in productivity can cause exponential economic growth. But what if things change a little this time?

What if this time the Intelligence Revolution leads to exponential productivity growth? At some point, a chain of breakthroughs would allow us to create a Narrow AI whose only goal is self-improvement. If it can tweak its own code to get even better at tweaking its code, we have what is known as recursive self-improvement (RSI). This loop could theoretically lead to an exponential takeoff of intelligence and productivity.
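To get a feel for why a self-improvement loop matters, here is a toy sketch. It is not a model of any real AI system: the `simulate` function, the `gain` value and the number of steps are all made up for illustration. It only contrasts improvements of a fixed size with improvements whose size depends on how capable the system already is.

```python
def simulate(steps: int, gain: float, recursive: bool) -> list[float]:
    """Toy capability trajectory (hypothetical numbers, for intuition only).

    recursive=False: every step adds the same fixed amount,
                     as if outside engineers improved the system.
    recursive=True:  every step adds an amount proportional to the
                     current capability, as if the system's own skill
                     set the size of its next improvement.
    """
    capability = 1.0
    history = [capability]
    for _ in range(steps):
        capability += gain * (capability if recursive else 1.0)
        history.append(capability)
    return history


fixed = simulate(steps=20, gain=0.5, recursive=False)
looped = simulate(steps=20, gain=0.5, recursive=True)

print(f"fixed gains after 20 steps:     {fixed[-1]:,.0f}x")
print(f"recursive gains after 20 steps: {looped[-1]:,.0f}x")
```

With the same `gain`, the fixed version grows along a straight line while the recursive version compounds. Real systems would hit limits (compute, data, diminishing returns) that this sketch ignores.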

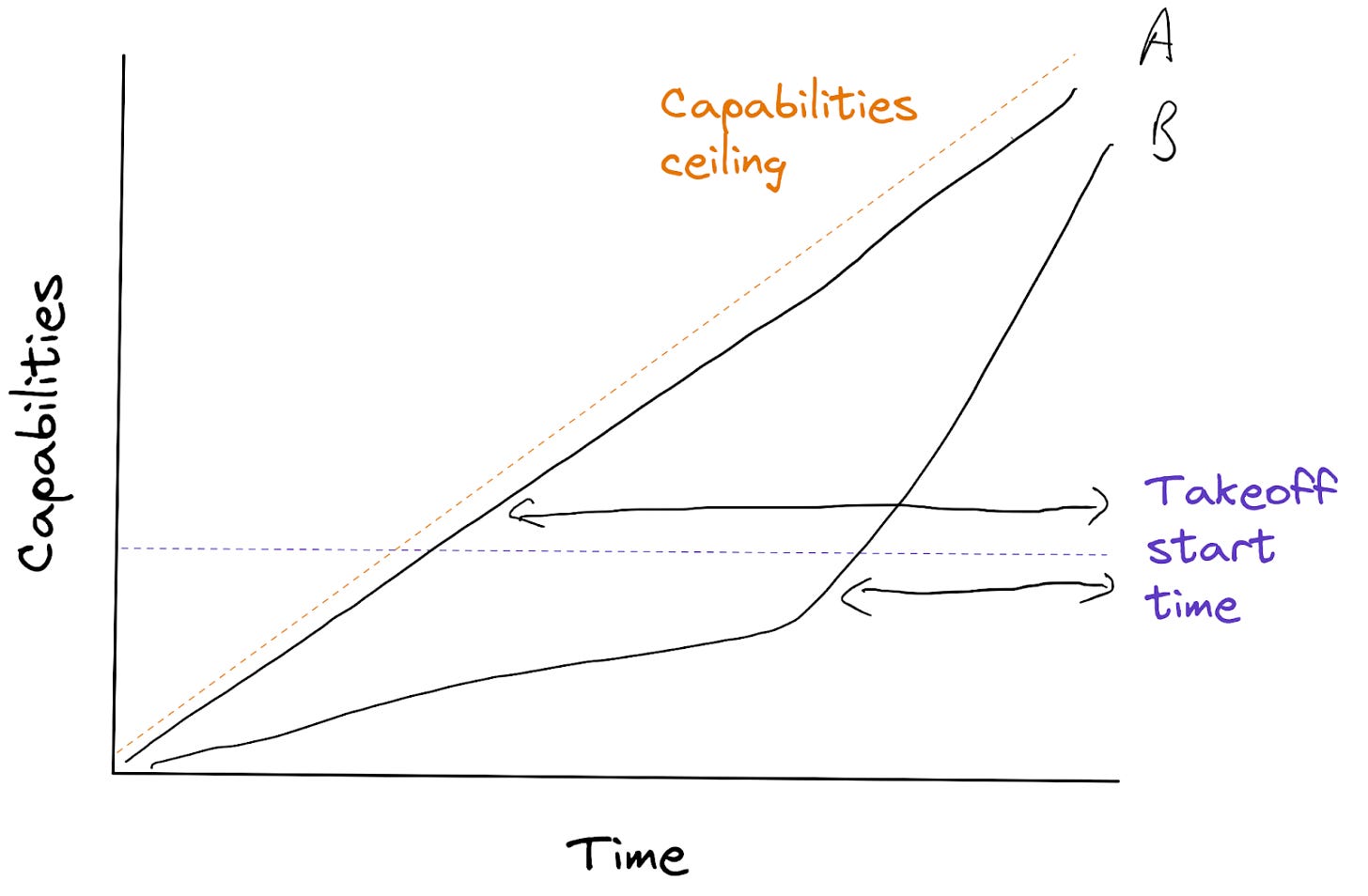

This idea started the AI Takeoff debates, exploring the process of a Narrow AI transitioning to AGI and then to ASI (aka Superintelligence or Singularity). It's all about guessing what our future might look like. And guess what? There are many possible ways to continue this image:

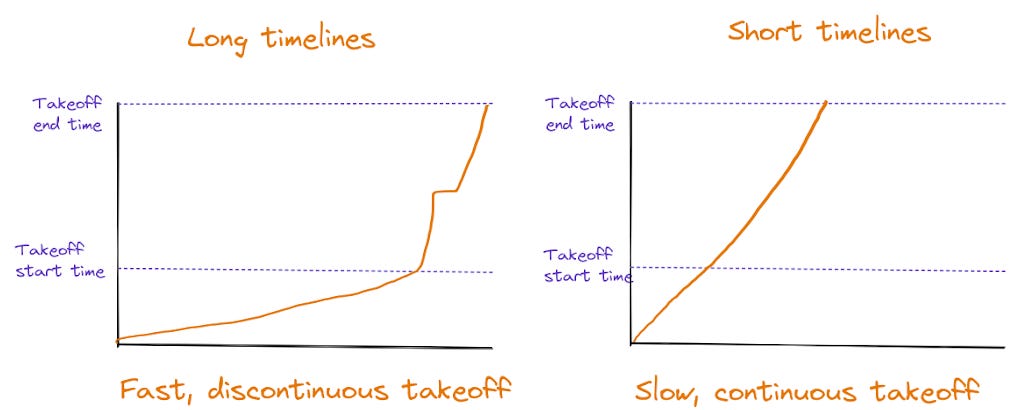

AI takeoff discussions focus on its timing, the pace of AI development and whether it'll be many small steps or a few big leaps. Here is a helpful overview by Rose Hadshar and Alex Lintz that presents 8 scenarios. I'll highlight just two for the sake of simplicity:

Fast Takeoff, also known as FOOM, is a scenario where there are quick jumps in key capabilities. Initially, these jumps may not have much impact on the economy. But then, within hours or days, everything changes and we find ourselves in a new world. This situation has a strong "winner-takes-all" dynamic, and once it starts, there is hardly any time to react.

This idea points to a future that seems more like something out of a sci-fi movie than reality. Yet, people like Nick Bostrom, Eliezer Yudkowsky and Nate Soares believe it could happen. And they argue that we should get ready for it. In his article, Tim Urban shows how we could miss FOOM sneaking up on us:

The Slow Takeoff suggests the move toward powerful AI systems will be steady. But even less advanced AI systems will still make a big difference in the world. Each improvement will predictably boost capabilities a little and come out as updates to current products.

"Slow" may not mean what you think it means. It now takes about 16 years for the global economy to double. But the slow scenario suggests that the doubling time will fall sharply even before AGI. First the economy will double in just 4 years, then in just 1 year. And all this before AGI, with real products driving growth! People like Paul Christiano, Robin Hanson and Ray Kurzweil support this idea.
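To make these doubling times more tangible, here is a small sketch that converts them into a constant yearly growth rate. It assumes smooth compound growth, which is a simplification, and `annual_growth_rate` is just an illustrative helper name.

```python
def annual_growth_rate(doubling_time_years: float) -> float:
    """Constant yearly growth rate that doubles output in the given number of years.

    Solves (1 + r) ** doubling_time_years == 2 for r.
    """
    return 2 ** (1 / doubling_time_years) - 1


for years in (16, 4, 1):
    rate = annual_growth_rate(years)
    print(f"doubling every {years:>2} years -> about {rate:.1%} growth per year")
```

Under this assumption, a 16-year doubling time corresponds to roughly 4.4% yearly growth, 4 years to roughly 18.9%, and 1 year to 100%. So even the "slow" scenario implies growth rates far beyond anything in modern economic history.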

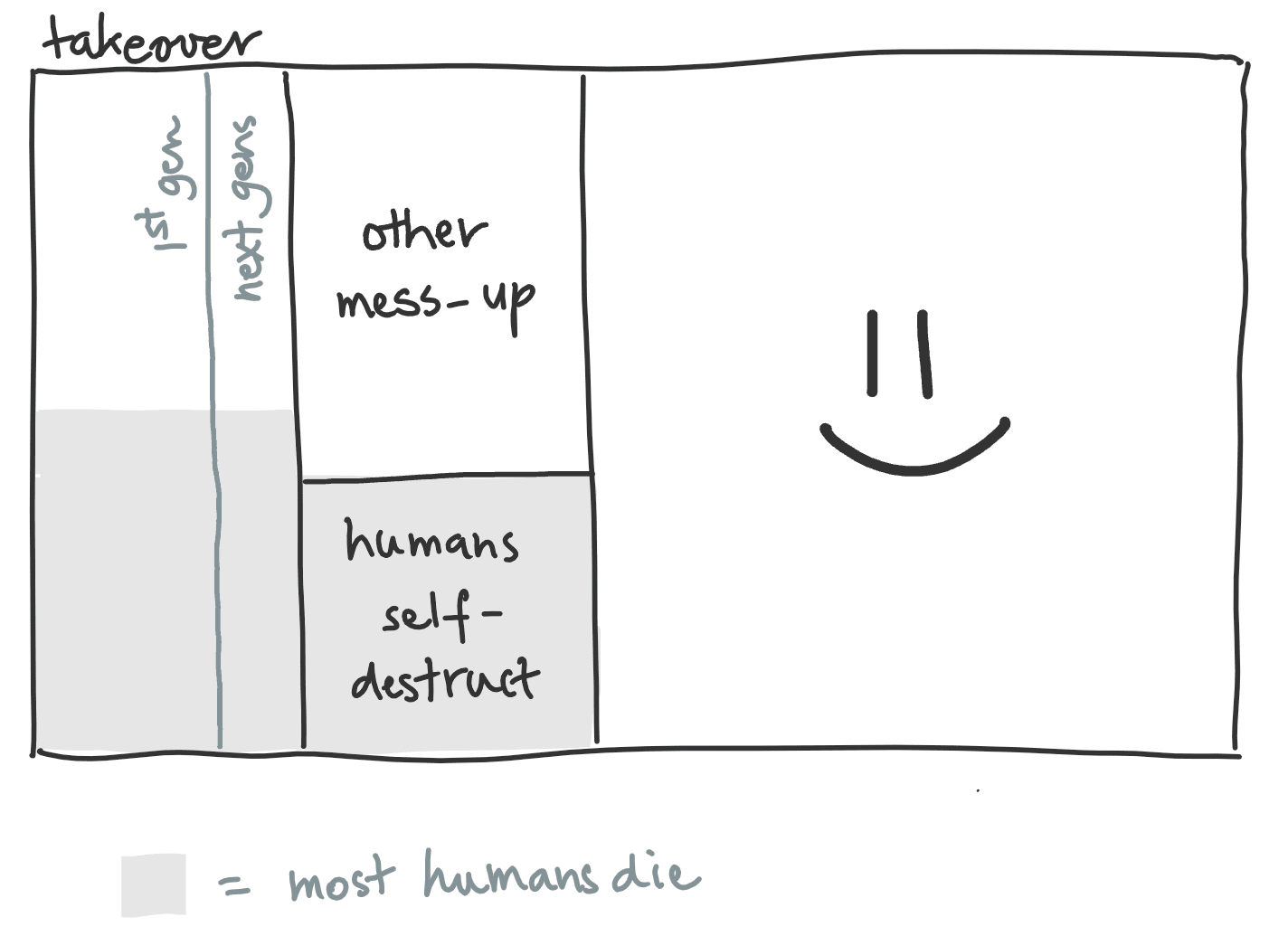

But a Slow Takeoff doesn't mean a safe one. Paul led the alignment team at OpenAI and was one of the authors of RLHF. He hasn't said it directly, but I think his views reflect to some extent the views of many people at OpenAI, Meta and DeepMind. And you can see Paul's estimates of the risk of Doom are pretty high:

(image by Victor Lecomte; the squares show the percentage of different outcomes)

The Fast vs. Slow debate is a pretty difficult topic. Eliezer and Paul had a ~50,000 word conversation and still kept their positions. They interpret the evidence differently based on their perspectives. Christiano suggested some measurable benchmarks, while Yudkowsky focused more on qualitative milestones. They both trust their views of the world after thinking about these issues for years.

Since there is no solid evidence to support one view over the other, both positions remain legitimate. Though Paul unofficially "represents" the practitioners.

3.4 - What is the current plan so far?

Over the last ten years we have learned something important from Richard Sutton's "Bitter Lesson". Transformers and image generation models have shown us that neural networks work much better than we thought. DeepMind's AlphaZero was a real eye-opener here — these systems can already outperform humans in certain tasks. So Recursive Self-Improvement may not be the main thing — it's seen as just one part of a larger, more complicated idea. Here is what Paul says:

I expect successful AI-automating-AI to look more like AI systems doing programming or ML research, or other tasks that humans do.

I think they are likely to do this in a relatively "dumb" way (by trying lots of things, taking small steps, etc.) compared to humans.

But that the activity will look basically similar and will lean heavily on oversight and imitation of humans rather than being learned de novo. Performing large searches is the main way in which it will look particularly unhuman, but probably the individual steps will still look like human intuitive guesses rather than something alien.

This leads us to a practical conclusion: AI labs think it's safer to deploy powerful AI than to leave it unused. That's our current strategy for Takeoff.

The plan is to roll out the technology incrementally, in small steps as soon as it's ready and we have taken the necessary safety precautions. This will avoid a compute overhang, reduce the likelihood of sudden changes and give society more time to create rules and adapt. Here's Sam Altman confirming this approach:

In the meantime, AI assistants can bootstrap the process of solving the alignment problem. To be practical, the alignment work needs to be done with powerful systems. In theory, we only need Narrow AI for this research, since evaluating the research is easier than doing it. Think about how AlphaZero's smart search gives it an edge:

With this edge, we can guide new models with older ones until we achieve AGI. And once it's clear that the systems have become dangerous, people can work together to slow progress until we've solved alignment. That's the plan as I understand it. However, I'm oversimplifying and leaving out details. There is much more context in Nate Soares' explanation and Rose and Alex's post.

Takeaways

I hear this concept a lot from Demis Hassabis, Sam Altman, and Mark Zuckerberg in interviews. While I'm not sure it's a great idea, it seems like they're giving it some thought and not just blindly rushing into the abyss.

Every morning I wake up with the feeling that something unknown is coming. The scale of the future makes the past irrelevant. The news I follow leaves me with the impression of being overwhelmed by progress. There is more stuff to read than you have time for. But following people like Demis is still useful to understand the big picture. They will help you distinguish important information from hype.

Uhhh wait, I haven't read your previous 2 pieces!

I started this story with a promise to show you how Big Tech thinks. So here it is: AGI is important, but we need more Scaling, better Grounding, advanced Capabilities, and to solve Safety.

Scaling speed is limited by technological complexity, so expect large models to grow by an order of magnitude per version. Pay attention to what new basic capabilities they get.

Models learn better the better they are grounded. So expect Multimodality and Robotics to evolve. Pay attention to news about Agency and Self-play.

Smarter models will need advanced capabilities like Planning, Search, Reasoning, and Long-term Memory. Pay attention to the "Search for a better Plan" capability and the model's ability to stick to the plan.

The problem of coexisting with a model that is too smart is still unsolved. Pay attention to updates to AI labs' safety frameworks and progress in model evaluation.

Alright, if you're feeling overwhelmed by the media, this recipe should help. Just focus on these four things. And one last tip before I go:

Take good care of your health and don't take unnecessary risks!
